Issue 3: Autumn 2024

| Sl No. | Article | Author |
|---|---|---|
| 1 | The Future of Human-Computer Interfaces | Tripti Manya (CSE/21/077) |
| 2 | Artificial Intelligence in Cybersecurity | Indrani Mondal (CSE/22/L-141) |
| 3 | Human vs Artificial Intelligence | Amritendu Das (CSE/21/114) |
| 4 | The Rise of Low-Code and No-Code Platforms | Abhinandan Banerjee (CSE/24/008) |
| 5 | Artificial Intelligence | Sushanta Samanta (CSE/24/026) |
| 6 | The Sky's the Limit: Exploring the Impact of Cloud Technology | Swati Jana (CSE/23/019) |
| 7 | Trash to Treasure: Revolutionizing E-Waste Management | Somoshree Pramanik (CSE/22/027) |
| 8 | The Impact of Automation on Future Job Markets | Sudip Ghosh (CSE/22/058) |
| 9 | Artificial Intelligence Aided Social Media and Adults | Subhrangsu Das (CSE/23/115) |
| 10 | Unlocking the Power of Computer Vision | Souradeep Manna (CSE/21/101) |
| 11 | Unpacking Space Tech for Everyday Life | Nisha Masanta (CSE/21/T-148) |
| 12 | The Intersection of Biotech and Tech | Priti Senapati (CSE/22/146) |
| 13 | The Rise of 5G | Deepanjan Das (CSE/21/044) |
| 14 | Edge Computing: A Paradigm Shift in Computing | Arghya Das (CSE/22/040) |
| 15 | The Internet of Things: Connecting the World Around Us | Mohammad (CSE/22/020) |
| 16 | The Rise of Voice Technology | Partha Sarathi Manna (CSE/22/004) |
| 17 | Augmented Reality: Blending the Virtual and Real Worlds | Usha Rani Bera (CSE/22/099) |

The Future of Human-Computer Interfaces

"An unlikely convergence of nature and technology"

Tripti Manya (CSE/21/077)

From Touchscreens to Neural Interfaces:

The way we interact with computers has changed a lot over time. From using buttons and keyboards to touchscreens and voice commands, technology keeps getting more advanced and easier to use.

Neural interfaces take this a step further. Instead of using keyboards, mice, or touchscreens, this technology allows us to control machines directly with our brain signals. It sounds like science fiction, but it is becoming a reality thanks to advances in neuroscience and computer technology.

This technology could be a game-changer for many fields, including space exploration, where astronauts work in tough conditions and need the most advanced tools to succeed.

How Does It Work?

Neural interface technology, also called brain-computer interfaces (BCIs), works by detecting brain activity. When you think about or imagine an action, your brain generates electrical signals. These signals can be captured by sensors, either placed on the scalp or implanted in the brain.

The brain signals are then processed by a computer, which uses algorithms to decode the intended action or thought.

Once decoded, the signals are used to control a device, such as moving a robotic arm, typing on a virtual keyboard, or navigating a drone.

This seamless communication between the brain and machines can make interactions faster, more efficient, and more intuitive.
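The capture, decode, and control steps above can be illustrated with a toy sketch in Python. It is not how any particular BCI works: it assumes a single simulated EEG channel sampled at 250 Hz, a decoder that looks only at power in the 8 to 12 Hz mu band (which tends to weaken over the motor cortex when a person imagines moving), and a hand-picked threshold. The function names, the threshold, and the robot-arm command names are all hypothetical; real systems use many channels, filtering, and trained classifiers.

```python
import math

SAMPLE_RATE = 250  # Hz; assumed sampling rate for the simulated EEG channel


def band_power(samples, sample_rate, low_hz, high_hz):
    """Average power of the naive-DFT bins whose frequency lies in [low_hz, high_hz]."""
    n = len(samples)
    total, bins = 0.0, 0
    for k in range(1, n // 2):
        freq = k * sample_rate / n
        if low_hz <= freq <= high_hz:
            re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(samples))
            total += (re * re + im * im) / n
            bins += 1
    return total / bins if bins else 0.0


def decode(samples, threshold):
    """Hypothetical decoder: suppressed mu-band (8-12 Hz) power is read as 'move'."""
    mu_power = band_power(samples, SAMPLE_RATE, 8.0, 12.0)
    return "move" if mu_power < threshold else "rest"


def to_command(intent):
    """Map a decoded intent to a device command (command names are made up)."""
    return {"move": "ROBOT_ARM_EXTEND", "rest": "ROBOT_ARM_HOLD"}[intent]


def synthetic_eeg(mu_amplitude, seconds=1.0):
    """Stand-in for sensor data: a pure 10 Hz rhythm with the given amplitude."""
    n = int(SAMPLE_RATE * seconds)
    return [mu_amplitude * math.sin(2 * math.pi * 10 * i / SAMPLE_RATE) for i in range(n)]


if __name__ == "__main__":
    at_rest = synthetic_eeg(mu_amplitude=1.0)   # strong mu rhythm
    imagined = synthetic_eeg(mu_amplitude=0.3)  # mu rhythm suppressed by imagined movement
    print(to_command(decode(at_rest, threshold=5.0)))   # ROBOT_ARM_HOLD
    print(to_command(decode(imagined, threshold=5.0)))  # ROBOT_ARM_EXTEND
```

Each stage maps onto one function: the sensors (synthetic_eeg), the decoding algorithm (band_power and decode), and device control (to_command).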

Applications of Neural Interface Technology:

The possibilities for BCIs are vast, and they are already making an impact in various fields:

Medical and Accessibility Tools:

BCIs are helping people with disabilities regain control over their lives. For instance, individuals with paralysis can operate wheelchairs or prosthetic limbs using only their thoughts. Similarly, BCIs allow patients with speech impairments to communicate through brain-controlled typing systems.

Gaming and Virtual Reality (VR):

Imagine playing a video game or experiencing a virtual world where your thoughts guide the action. BCIs can make gaming and VR experiences more immersive and lifelike by eliminating the need for traditional controllers.

Industrial and Military Applications:

In high-stress environments like factories or military operations, BCIs can enable hands-free control of complex systems, improving efficiency and reducing response time.

Brain Monitoring and Therapy:

BCIs are being used to monitor brain activity in real time to detect and treat neurological disorders like epilepsy or Parkinson’s disease. They are also being explored for mental health therapies, such as reducing anxiety or managing depression.

Imagine being able to control a robot or spacecraft just by thinking about it.

Here’s How Neural Interfaces Can Help in Space:

- Control Robots with Your Mind:

Astronauts could use BCIs to control robotic arms or rovers with incredible accuracy. For example, they could fix equipment on a satellite just by thinking about the movements.

- Health Monitoring:

BCIs could track brain activity to spot signs of tiredness, stress, or mental strain caused by long space missions. The system could suggest rest or adjust the workload automatically.

- Hands-Free Operation:

In zero gravity, where hands are often busy, BCIs would let astronauts operate spacecraft systems or perform experiments without needing to press buttons or use touchscreens.

- Remote Exploration:

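Ground teams on Earth could command Mars rovers more intuitively using BCIs, making exploration faster and more accurate, although the signal delay between Earth and Mars (several minutes each way) rules out true real-time control.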

Challenges and Limitations

While neural interface technology is promising, there are hurdles that researchers are working to overcome:

Signal Complexity: Brain signals are not straightforward. Decoding them accurately is a significant challenge.

Safety Concerns: Invasive BCIs, with sensors implanted in the brain, require surgery, which carries risks. Non-invasive BCIs, with sensors placed on the scalp, are safer but usually less precise.

High Costs: The technology is expensive, making it less accessible for widespread use.

Ethical and Privacy Issues: BCIs deal with sensitive brain data, raising concerns about data misuse or hacking.

Despite these challenges, advancements in artificial intelligence (AI) and machine learning are helping improve the accuracy and reliability of BCIs, making them more practical for everyday use.

The Future of Neural Interfaces

As research progresses, neural interfaces are expected to integrate seamlessly into our daily lives. Here are some exciting possibilities:

Augmented Workplaces: Professionals could control software, machines, or robots more intuitively, improving productivity in industries like healthcare, engineering, and manufacturing.

Enhanced Communication: People with communication difficulties could use BCIs to convey thoughts directly, breaking down barriers caused by physical limitations.

Smarter Systems: Combining BCIs with AI could result in systems that adapt to human needs and predict actions based on brain activity, offering a more personalized experience.

"It might sound ironic, but humans are on their way to growing an artificial world."

Artificial Intelligence in Cybersecurity

Indrani Mondal (CSE/22/L-141)

As our digital world grows at an incredible pace, artificial intelligence (AI) is at the heart of this change. Although AI is still in its early days, it is already a big part of our everyday lives, from Siri and Alexa helping us at home to businesses using AI to make quick, informed decisions and handle large volumes of data. It is all around us, making things easier and more connected.

AI can create outputs so lifelike that it becomes hard to tell what is real from what is artificial, and it is also changing the game in cybersecurity. It can spot and stop threats quickly, predict issues before they happen, and model online behavior, making our digital world safer.

- Threat Detection & Prediction

Predictive analytics powered by AI can anticipate emerging threats, providing early warnings that allow organizations to take preventive measures before an attack occurs.

- Automated Incident Response

AI-driven automation streamlines the incident response process by quickly identifying and isolating threats. This reduces the time it takes to respond to security incidents, limiting potential damage and minimizing downtime.

- Cloud Security

AI enhances cloud security by monitoring cloud environments for suspicious activities and checking compliance with security policies. It helps organizations reduce internal and external risks by applying security measures across different cloud environments.

- Fraud Detection

In sectors like finance, AI is instrumental in detecting fraudulent transactions. By analyzing patterns and behaviors that deviate from the norm, AI systems can quickly identify and prevent fraudulent activities, reducing financial losses and protecting sensitive data.
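The "deviate from the norm" idea behind fraud detection can be sketched with a simple statistical rule. This minimal example assumes we have a customer's past transaction amounts and flags any new amount more than three standard deviations from their mean (a z-score test). The helper name and data are hypothetical, and production systems combine many more signals, such as location, device, and timing, usually with learned models.

```python
from statistics import mean, stdev


def flag_anomalies(history, new_amounts, z_threshold=3.0):
    """Return the new amounts lying more than z_threshold standard deviations
    from the mean of the customer's past amounts (needs at least 2 past values)."""
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts were identical: treat any different amount as unusual.
        return [a for a in new_amounts if a != mu]
    return [a for a in new_amounts if abs(a - mu) / sigma > z_threshold]


if __name__ == "__main__":
    past = [20, 25, 22, 30, 18, 27, 24, 21]  # made-up past purchase amounts
    print(flag_anomalies(past, [26, 480, 19]))  # [480]
```

The threshold controls the trade-off: lowering it catches more fraud but also flags more legitimate purchases.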

AI-powered security tools are revolutionizing how organizations approach cybersecurity. These tools use advanced algorithms to protect against various threats, from malware to phishing attacks.

Technical and Operational Challenges

- False Positives/Negatives: AI systems may produce false alarms or miss actual threats.

- Complexity and Interpretability: The intricate nature of AI models can make them difficult to understand and interpret, complicating troubleshooting and trust in automated decisions.

- Resource Intensive: Implementing and maintaining AI systems can require substantial computational resources and infrastructure, which may be cost-prohibitive for some organizations.

- Integration Challenges: Seamlessly incorporating AI into existing security infrastructure can be complex and may require significant adjustments or overhauls.
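Measuring the first of these challenges is straightforward once alerts are compared with confirmed outcomes. The sketch below, with made-up labels and a hypothetical error_rates helper, computes the false positive rate (false alarms among benign events) and the false negative rate (missed threats among real ones). Making a detector more sensitive typically lowers missed threats but raises false alarms, which is why both rates are tracked together.

```python
def error_rates(predicted, actual):
    """False positive rate and false negative rate for binary threat alerts.

    predicted, actual: equal-length sequences of booleans (True = threat).
    """
    false_pos = sum(1 for p, a in zip(predicted, actual) if p and not a)
    false_neg = sum(1 for p, a in zip(predicted, actual) if a and not p)
    negatives = sum(1 for a in actual if not a)  # benign events
    positives = sum(1 for a in actual if a)      # real threats
    fpr = false_pos / negatives if negatives else 0.0
    fnr = false_neg / positives if positives else 0.0
    return fpr, fnr


if __name__ == "__main__":
    actual = [True, False, False, True, False, False, False, True]
    predicted = [True, True, False, False, False, False, False, True]
    fpr, fnr = error_rates(predicted, actual)
    print(f"false positive rate: {fpr:.2f}")  # 0.20 (1 false alarm out of 5 benign events)
    print(f"false negative rate: {fnr:.2f}")  # 0.33 (1 missed threat out of 3)
```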

Ethical & Bias Issues

- Bias and Fairness: AI systems can inherit biases in their training data, leading to unfair or discriminatory outcomes that may disproportionately affect certain groups.

- Regulatory and Ethical Issues: The use of AI in cybersecurity raises various regulatory and ethical concerns, including data privacy and compliance with legal standards.

Skill & Knowledge Gaps

- Skill Gap: There is a shortage of professionals with the expertise needed to develop, manage, and secure AI-driven cybersecurity systems, which can limit their effectiveness.

- Data Privacy: Ensuring that AI systems handle sensitive data responsibly and in compliance with privacy regulations is a significant concern.

Artificial Intelligence (AI) is at the forefront of data protection, enabling organizations to safeguard sensitive information and prevent cyber threats more efficiently. Here’s a look at some of the companies leading the charge in integrating AI into cybersecurity.

- IBM: IBM Security QRadar

IBM uses AI to power its QRadar Security Information and Event Management (SIEM) system, which analyzes vast amounts of data to detect threats and reduce response times. Its AI-driven capabilities help organizations identify vulnerabilities, comply with regulations, and mitigate risks.

- Microsoft: Microsoft Azure Information Protection (AIP)

Microsoft’s AIP uses AI to classify and protect data automatically based on its sensitivity. Integrated with Microsoft 365, it applies encryption, watermarks, and access controls to safeguard confidential files.

- Google: Chronicle Security

Google’s Chronicle uses AI to process petabytes of security telemetry data, helping businesses identify and respond to threats efficiently. Its cloud-native platform is designed to scale for global enterprises.

- Vectra AI: Cognito Platform

Vectra’s Cognito platform uses AI to analyze network traffic, detecting covert cyberattacks and data exfiltration attempts. It focuses on identifying threats within encrypted traffic without compromising privacy.

Artificial Intelligence (AI) is transforming cybersecurity by providing faster, smarter, and more efficient ways to detect and counter threats. Its ability to process vast amounts of data, recognize patterns, and adapt to new threats makes it indispensable in protecting sensitive information in an increasingly digital world.

Human vs Artificial Intelligence

Amritendu Das (CSE/21/114)

The clash and collaboration between human and artificial intelligence (AI) have been central to many discussions in technology and philosophy. While both humans and AI have problem-solving capabilities, their approaches, strengths, and limitations vary fundamentally. This article breaks down the distinctions, overlaps, and complementary roles that humans and AI play across different domains.

Human Intelligence (HI) refers to the cognitive abilities of humans, encompassing emotional intelligence, creativity, problem-solving, and social interaction. Human intelligence evolves over time and is shaped by factors such as culture, experience, education, and biological makeup. Artificial Intelligence (AI), on the other hand, is the replication of certain cognitive functions through machines and computer systems. AI systems are designed to process vast amounts of data, recognize patterns, and make decisions based on algorithms. While AI can mimic certain aspects of human intelligence, it is ultimately rooted in data and programmed algorithms, rather than experience or emotion.

Human intelligence has evolved over millions of years, equipping people with the capacity to adapt, think critically, and display empathy. Key strengths include:

2.1. Emotional Intelligence: Humans can understand, interpret, and manage emotions—both their own and those of others. This enables nuanced social interactions, empathy, and interpersonal skills that are crucial in collaborative settings and emotional contexts.

2.2. Creativity and Innovation: Human creativity is not limited by algorithms; it is fueled by curiosity, emotions, and complex experiences. This allows humans to imagine, innovate, and develop ideas beyond the data available, producing unique art, literature, and technological advances.

2.3. Critical and Abstract Thinking: Humans can draw conclusions based on limited information, make ethical judgments, and think abstractly. Critical thinking allows humans to analyze situations in a holistic manner, seeing nuances that may not be immediately apparent.

AI is rapidly transforming industries by automating repetitive tasks and enhancing data-driven decision-making. Its key strengths include:

3.1. Speed and Precision: AI can process and analyze enormous datasets in seconds, providing insights and solutions with a level of accuracy and speed that humans cannot achieve. This is particularly useful in fields like finance, healthcare, and cybersecurity.

3.2. Pattern Recognition: AI excels at identifying patterns within massive data sets, making it invaluable for applications like image recognition, language translation, and diagnostics. Machine learning, a subset of AI, allows systems to "learn" from data over time, improving their performance with more exposure.

3.3. Risk Management and Simulation: AI can simulate scenarios and predict outcomes based on historical data. This makes it valuable in fields such as weather forecasting, finance, and logistics, where analyzing past patterns is crucial for predicting future trends.
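Pattern recognition and "learning from data over time" can be illustrated with one of the simplest classifiers, nearest centroid: each class is summarized by the average of its examples, and a new input gets the label of the closest average. This is a teaching sketch with made-up animal measurements and a hypothetical NearestCentroid class, not how modern image or language systems work. Each call to learn nudges a class average, so the model's behavior changes as it sees more data.

```python
from math import dist


class NearestCentroid:
    """Toy pattern recognizer: each class is represented by the mean of its examples."""

    def __init__(self):
        self.sums = {}    # label -> running sum of feature vectors
        self.counts = {}  # label -> number of examples seen so far

    def learn(self, features, label):
        """Fold one labelled example into its class average (incremental learning)."""
        if label not in self.sums:
            self.sums[label] = [0.0] * len(features)
            self.counts[label] = 0
        self.sums[label] = [s + f for s, f in zip(self.sums[label], features)]
        self.counts[label] += 1

    def centroid(self, label):
        """Current average feature vector for a class."""
        return [s / self.counts[label] for s in self.sums[label]]

    def predict(self, features):
        """Return the label whose class average is closest (Euclidean distance)."""
        return min(self.sums, key=lambda label: dist(features, self.centroid(label)))


if __name__ == "__main__":
    model = NearestCentroid()
    # Made-up (height in cm, weight in kg) measurements.
    for features, label in [((25, 4), "cat"), ((23, 5), "cat"), ((60, 30), "dog"), ((55, 25), "dog")]:
        model.learn(features, label)
    print(model.predict((28, 6)))   # cat
    print(model.predict((50, 20)))  # dog
```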

Despite the impressive capabilities of human intelligence, humans are limited by factors such as cognitive biases, emotional influences, and physical constraints. Humans can make errors due to fatigue, stress, or poor judgment and are prone to being influenced by emotions and biases.

Similarly, AI has significant limitations. It relies entirely on data, which means it lacks the context, intuition, and ethical considerations that humans bring to decision-making. AI cannot experience emotions or possess consciousness; it operates based on algorithms, lacking a true understanding of the information it processes. When faced with ambiguous situations or ethical dilemmas, AI may struggle to make judgments as humans would.

One of the most promising aspects of the relationship between human and artificial intelligence is their potential for collaboration. By combining human intuition and creativity with AI’s data-driven capabilities, we can achieve results that neither could accomplish alone.

5.1. Enhanced Decision-Making: In fields like healthcare, AI can analyze patient data to suggest diagnoses, while human doctors interpret these results, applying empathy, clinical judgment, and ethical considerations. This combination can improve patient outcomes and reduce the chance of diagnostic errors.

5.2. Creative Assistance: AI tools in creative industries help artists, writers, and musicians explore new ideas by generating inspirations or suggesting improvements. However, the final, nuanced touch often remains with the human creator, ensuring the work resonates on an emotional level.

5.3. Data Analysis in Research: In scientific research, AI can analyze vast datasets to uncover patterns, while human scientists interpret the findings and direct research based on intuition and existing knowledge. This approach accelerates breakthroughs in areas like genomics, climate science, and drug discovery.

As AI becomes more integrated into daily life, ethical concerns arise regarding privacy, security, and autonomy. Issues include:

6.1. Data Privacy: AI relies on large amounts of data, raising questions about data ownership, consent, and privacy. Personal data used to train AI models can inadvertently expose individuals’ private information.

6.2. Employment and Automation: AI’s ability to automate tasks is a double-edged sword. While it increases efficiency, it also threatens job displacement in many industries, particularly in manufacturing and administration. Finding a balance between automation and employment is crucial for societal stability.

6.3. Accountability and Bias: AI systems, although designed to be neutral, can inherit biases from the data they are trained on. This can result in unfair treatment in areas such as hiring, law enforcement, and lending. Ensuring AI accountability and developing unbiased algorithms are essential steps forward.
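One common way to check a model's decisions for this kind of inherited bias is to compare outcome rates across groups. The sketch below, using hypothetical approval decisions and helper names, computes each group's approval rate and the ratio of the lowest rate to the highest. A ratio below 0.8 is a widely used warning sign (the "four-fifths rule" from US employment guidelines), though passing this single check does not prove a system is fair.

```python
def selection_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns approval rate per group."""
    totals, approved = {}, {}
    for group, ok in decisions:
        totals[group] = totals.get(group, 0) + 1
        approved[group] = approved.get(group, 0) + (1 if ok else 0)
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest (1.0 means equal rates)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest else 1.0


if __name__ == "__main__":
    # Made-up model outputs: group A approved 8 of 10 times, group B 4 of 10 times.
    decisions = [("A", i < 8) for i in range(10)] + [("B", i < 4) for i in range(10)]
    ratio = disparate_impact_ratio(decisions)
    print(selection_rates(decisions))  # {'A': 0.8, 'B': 0.4}
    print(ratio)                       # 0.5
    if ratio < 0.8:
        print("Warning: possible adverse impact; review the training data and features.")
```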

The distinction between human and artificial intelligence is not about which is superior but rather about their complementary roles. Human intelligence brings intuition, creativity, and empathy to the table, while AI contributes speed, precision, and consistency. As we advance into an AI-driven future, fostering a harmonious relationship between the two is key to achieving sustainable progress and addressing complex global challenges.

To maximize the potential of both human and artificial intelligence, it is essential to cultivate a collaborative environment that respects ethical boundaries, protects human autonomy, and values the unique contributions of each. This partnership has the potential to propel innovation and solve problems in ways previously unimaginable. However, it is equally vital to address ethical challenges and ensure that AI serves humanity responsibly.

The Rise of Low-Code and No-Code Platforms: Revolutionizing Software Development

Abhinandan Banerjee (CSE/24/008)

In the digital age, Low-Code and No-Code (LCNC) platforms have made software development easier, faster, and more accessible. They allow people with little or no programming experience to build applications that automate business processes and boost productivity, while bringing business and IT teams closer together.

Low-Code platforms let developers build applications with minimal hand-coding. They provide a visual interface with drag-and-drop components that help developers fast-track the application lifecycle. No-Code platforms, as their name suggests, eliminate coding altogether. They target non-technical users, also known as "citizen developers," enabling them to build simple applications on their own.
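One common design behind low-code tools is that whatever the user assembles visually is saved as a declarative description, which a generic engine then interprets. The sketch below assumes a made-up form schema (FORM_SPEC) and a hypothetical validate function; it does not reflect the file format of any specific platform. Adding or changing a field means editing data rather than writing new validation code.

```python
# A form definition as a visual builder might save it (hypothetical schema).
FORM_SPEC = {
    "name": "leave_request",
    "fields": [
        {"id": "employee", "type": "text", "required": True},
        {"id": "days", "type": "number", "required": True, "min": 1, "max": 30},
        {"id": "reason", "type": "text", "required": False},
    ],
}


def validate(spec, submission):
    """Generic engine: check any submission against any spec and return error messages."""
    errors = []
    for field in spec["fields"]:
        value = submission.get(field["id"])
        if value is None or value == "":
            if field.get("required"):
                errors.append(f"{field['id']} is required")
            continue
        if field["type"] == "number":
            if not isinstance(value, (int, float)):
                errors.append(f"{field['id']} must be a number")
            elif "min" in field and value < field["min"]:
                errors.append(f"{field['id']} must be at least {field['min']}")
            elif "max" in field and value > field["max"]:
                errors.append(f"{field['id']} must be at most {field['max']}")
        elif field["type"] == "text" and not isinstance(value, str):
            errors.append(f"{field['id']} must be text")
    return errors


if __name__ == "__main__":
    print(validate(FORM_SPEC, {"employee": "Asha", "days": 3}))  # []
    print(validate(FORM_SPEC, {"days": 45}))
    # ['employee is required', 'days must be at most 30']
```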

The greatest advantage LCNC platforms offer is speed. While traditional software development might take months, LCNC platforms can cut development time to days or weeks.

They also empower non-developers, reduce the load on IT departments, and can scale quickly, which makes them excellent for rapid prototyping and iterative design.

LCNC platforms have their downsides. The main issues are limited customizability and flexibility: complex applications tend to outgrow what the platform offers, and at that point only traditional coding can carry them to completion. Security is another major concern, especially when applications that handle sensitive data are built by non-professionals.

With AI and machine learning capabilities, LCNC platforms are likely to become even more powerful. From automating routine manual tasks to supporting sophisticated analytics, the evolution of LCNC promises to further democratize software development and open up new opportunities for innovation in every industry.

Low-Code and No-Code platforms are revolutionizing the way we think about building applications, creating new opportunities and shaping the future of software development.

Artificial Intelligence

Sushanta Samanta (CSE/24/026)

AI refers to computer systems designed to think, learn, and act in ways that resemble human intelligence. It is used for various purposes, such as:

- Data analysis: Quickly analyzing large amounts of data to extract useful information.

- Problem solving: Applying complex math and logic to solve a variety of problems.

- Natural language processing: Understanding human language and using it to communicate with people.

- Image and video analysis: Extracting information from images and videos and interpreting their content.

- Robotics: Controlling robots to perform various tasks.

AI works using different types of algorithms and models. These algorithms are trained with large amounts of data, and through training, AI learns how to respond to new situations that resemble the examples it has seen.

Today AI is used in almost every aspect of our lives, for example:
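What "training an algorithm with data" means can be shown with the classic perceptron, one of the earliest learning algorithms. In this sketch (a teaching toy, not how modern AI systems are built) the model starts with all-zero weights and adjusts them each time it misclassifies one of four labelled examples, until it has learned the logical AND function. The function names and the choice of AND as training data are illustrative assumptions.

```python
def train_perceptron(examples, epochs=10, learning_rate=1.0):
    """Learn weights and a bias from (features, label) pairs with labels 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in examples:
            error = label - predict(weights, bias, features)  # -1, 0 or +1
            # Nudge the weights toward the correct answer only when the guess was wrong.
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


def predict(weights, bias, features):
    """Output 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    return 1 if sum(w * x for w, x in zip(weights, features)) + bias > 0 else 0


if __name__ == "__main__":
    # Training data for logical AND: output 1 only when both inputs are 1.
    data = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
    w, b = train_perceptron(data)
    print([predict(w, b, x) for x, _ in data])  # [0, 0, 0, 1]
```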

- Health sector: Diagnosis, drug discovery, treatment planning etc.

- Trade: Understanding customer needs, marketing, sales etc.

- Entertainment: Creating and recommending movies, games, music etc.

- Vehicle: Self-driving cars, drones, etc.

- Education: Facilitating the learning process of students.

AI is going to bring big changes to our lives in the coming years. These changes will affect the way we work, the way we communicate, health care, and almost every other aspect of our daily lives.

- Job changes: Many jobs may become automated, leading to the disappearance of some occupations. On the other hand, new types of jobs will also be created. People will no longer have to do as many repetitive tasks and can focus on more creative work instead.

- Change in communication: People will be able to communicate more easily with virtual assistants. Language translation will become easier, thereby increasing global communication.

- Transforming education: AI can make education more personalized. Each student can learn at their own pace, with AI providing learning materials suited to their needs.

- Transforming healthcare: AI can help with diagnosis, drug discovery, and personalized treatment.

- Daily life: Smart homes, self-driving cars, and other smart devices will make our daily lives easier and more comfortable.

- Unemployment: Many people are likely to lose their jobs due to AI.

- Privacy: AI collects our personal information, which can be a threat to privacy.

- Ethics: The use of AI raises ethical issues, such as autonomous weapons and bias in AI systems.

- Conclusion: AI is going to bring huge changes to our lives. These changes will bring both opportunities and challenges. We need to be ready for these changes and find ways to use AI for the good of humanity.

The Sky's the Limit: Exploring the Impact of Cloud Technology

Swati Jana (CSE/23/019)

Cloud computing is a revolutionary technology that has transformed the way we store, access, and process data. It offers a flexible, scalable, and cost-effective solution for individuals and businesses of all sizes.

Cloud computing has rapidly become a cornerstone of digital transformation. In this article, we explore the fundamental models of cloud computing, namely Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS), and examine key trends such as edge computing, serverless architecture, and AI integration. By diving into real-world applications in healthcare, retail, finance, and more, we uncover how cloud technology is not only transforming today's business landscape but also shaping the future of innovation. This overview provides readers with a comprehensive understanding of cloud computing's potential, its current challenges, and the emerging developments on the horizon.

Cloud Computing: Revolutionizing Digital Transformation

In the digital era, cloud computing has become the backbone of many technologies, enabling flexibility, scalability, and cost-effectiveness. Let's explore what cloud computing is, its various types, advantages, and challenges, as well as key industry trends and real-world applications. Cloud computing is the delivery of computing services—including servers, storage, databases, networking, software, and intelligence—over the Internet, often referred to as "the cloud." Instead of owning and maintaining physical data centers and servers, businesses can rent cloud services, paying only for what they use, which allows them to scale resources up or down based on demand.
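The "pay only for what you use" point is easiest to see with a worked comparison. The demand profile, server capacity, and hourly price below are invented round numbers, the helper names are hypothetical, and the sketch ignores real-world pricing factors such as reserved-capacity discounts and finer-grained billing.

```python
import math


def fixed_capacity_cost(hourly_demand, capacity_per_server, price_per_server_hour):
    """Provision enough servers for the busiest hour and pay for them every hour."""
    servers = math.ceil(max(hourly_demand) / capacity_per_server)
    return servers * len(hourly_demand) * price_per_server_hour


def pay_per_use_cost(hourly_demand, capacity_per_server, price_per_server_hour):
    """Rent only as many servers as each hour actually needs."""
    server_hours = sum(math.ceil(d / capacity_per_server) for d in hourly_demand)
    return server_hours * price_per_server_hour


if __name__ == "__main__":
    demand = [100] * 16 + [900] * 8  # requests/s: 16 quiet hours, 8 busy hours (made up)
    print(fixed_capacity_cost(demand, 100, 0.5))  # 108.0 (9 servers x 24 h x 0.5)
    print(pay_per_use_cost(demand, 100, 0.5))     # 44.0 (88 server-hours x 0.5)
```

The savings come entirely from the quiet hours: fixed capacity pays for peak-sized infrastructure all day, while pay-per-use shrinks to one server when demand drops.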

- Scalability

Cloud computing allows you to easily scale up or down your computing resources to meet fluctuating demands, ensuring optimal performance and cost-efficiency.

- Cost Savings

By eliminating the need for on-premises hardware and IT infrastructure, cloud computing reduces capital expenditures and operational costs.

- Accessibility

Cloud-based applications and data can be accessed from anywhere, at any time, enabling remote work, collaboration, and global connectivity.
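The scalability benefit can be made concrete with the proportional rule that many autoscalers apply; the Kubernetes Horizontal Pod Autoscaler, for example, computes the desired replica count as ceil(current replicas × current utilization / target utilization). The sketch below implements that rule with hypothetical minimum and maximum bounds and a hypothetical function name, and it omits refinements real autoscalers add, such as a tolerance band and stabilization windows.

```python
import math


def desired_replicas(current_replicas, current_utilization, target_utilization,
                     min_replicas=1, max_replicas=10):
    """Proportional scaling rule: resize so utilization moves toward the target,
    then clamp the result to the allowed range."""
    raw = math.ceil(current_replicas * current_utilization / target_utilization)
    return max(min_replicas, min(max_replicas, raw))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # 6: busy, so scale up
    print(desired_replicas(4, 30, 60))   # 2: quiet, so scale down
    print(desired_replicas(4, 300, 60))  # 10: capped at max_replicas
```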

- Infrastructure as a Service (IaaS)

Provides on-demand access to computing, storage, and networking resources, allowing users to build and manage their own IT infrastructure.

- Platform as a Service (PaaS)

Offers a complete development and deployment environment, enabling users to build, test, and deploy applications without the need to manage the underlying infrastructure.

- Software as a Service (SaaS)

Provides ready-to-use software applications that can be accessed through the internet, eliminating the need for installation and maintenance on local devices.

- Infrastructure as a Service (IaaS):

What it is: IaaS provides virtualized computing resources over the internet.

Examples: Amazon Web Services (AWS), Google Compute Engine, Microsoft Azure.

Use case: Ideal for businesses needing high flexibility without hardware ownership. It allows them to rent storage, networking, and servers on demand.

- Platform as a Service (PaaS):

What it is: PaaS offers hardware and software tools (like databases, development tools, and operating systems) on the provider's infrastructure.

Examples: Google App Engine, Microsoft Azure App Services, Heroku.

Use case: Often used by developers, PaaS simplifies application deployment without managing the underlying infrastructure, facilitating rapid app development and scalability.

- Software as a Service (SaaS):

What it is: SaaS allows users to access applications via the internet without managing or maintaining infrastructure.

Examples: Microsoft Office 365, Salesforce, Dropbox.

Use case: Commonly used by businesses for productivity, CRM, and collaboration applications. It reduces costs related to software installation, maintenance, and upgrades.
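The three service models differ mainly in how much of the technology stack the customer still operates. The sketch below encodes the commonly drawn simplified picture as a small data structure; the layer names, the exact split, and the responsibilities helper are a generalization for illustration, and real offerings often blur these lines (customers usually remain responsible for their own data and user access even with SaaS).

```python
# Layers of a typical application stack, from top (closest to the user) to bottom.
STACK = ["application", "runtime", "middleware", "operating system",
         "virtualization", "servers", "storage", "networking"]

# How many layers, counted from the top, the customer still manages in each model.
CUSTOMER_LAYERS = {"on-premises": len(STACK), "IaaS": 4, "PaaS": 1, "SaaS": 0}


def responsibilities(model):
    """Split the stack into (customer-managed, provider-managed) layers for a model."""
    k = CUSTOMER_LAYERS[model]
    return STACK[:k], STACK[k:]


if __name__ == "__main__":
    for model in ["on-premises", "IaaS", "PaaS", "SaaS"]:
        customer, provider = responsibilities(model)
        print(f"{model}: customer manages {customer or 'nothing in the stack'}")
```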

- Public Cloud: Services are hosted by third-party providers and shared among multiple users, making them cost-effective and scalable. Examples include AWS, Google Cloud Platform, and Azure.

- Private Cloud: Exclusive to a single organization, private clouds offer greater control and security, often hosted on-premises or by third-party providers in a private data center.

- Hybrid Cloud: A combination of public and private clouds, hybrid setups enable organizations to balance flexibility and control, often using the private cloud for sensitive data and public cloud for less critical applications.

- Multi-Cloud: Multi-cloud refers to the use of multiple cloud services from different providers to prevent dependency on a single vendor, improve disaster recovery, and optimize performance.

- Edge Computing: With IoT and real-time data processing needs rising, edge computing processes data closer to the data source rather than relying solely on centralized cloud servers. This trend supports applications like autonomous vehicles and smart cities.

- AI and Machine Learning in the Cloud: Cloud platforms increasingly integrate AI services, making advanced analytics, natural language processing, and machine learning models accessible to organizations of all sizes.

- Serverless Computing: Serverless architecture allows developers to run code without managing infrastructure, automatically scaling resources based on workload. This approach lowers costs and simplifies application deployment.
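To make "run code without managing infrastructure" concrete, here is a minimal function-as-a-service sketch. It assumes the AWS Lambda Python handler convention, `handler(event, context)`, and the response shape used by an API Gateway proxy integration; other serverless platforms use different signatures, and the greeting logic is purely illustrative. The developer writes only the function; the platform provisions and scales whatever runs it.

```python
import json

# Minimal serverless function sketch, assuming the AWS Lambda Python
# handler convention handler(event, context). The platform, not this
# code, decides where and on how many machines it runs.


def lambda_handler(event, context):
    """Greet the caller named in the event's query string parameters."""
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    # Response shape expected by an API Gateway proxy integration.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


if __name__ == "__main__":
    # Local test: simulate an incoming HTTP event without any cloud setup.
    print(lambda_handler({"queryStringParameters": {"name": "reader"}}, None))
```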

- Healthcare: Medical institutions leverage cloud storage to maintain patient records, facilitate telemedicine, and use AI-driven diagnostics to improve patient care.

- Retail: Retailers utilize the cloud to enhance e-commerce platforms, personalize customer experiences, manage inventories, and analyze purchasing patterns to drive sales.

- Finance: Banks and financial institutions deploy cloud solutions for fraud detection, customer service chatbots, and to comply with regulatory standards on data security.

- Education: The cloud has enabled remote learning platforms, allowing students and educators worldwide to access educational resources, collaborate on assignments, and attend classes online.

- Entertainment and Media: Streaming services use cloud-based platforms to deliver video and audio content to millions of users, employing AI for recommendation engines and customer analytics.

Cloud computing is an enabler of modern digital transformation, offering flexibility, innovation, and cost efficiency. As industries continue to adopt cloud-based solutions, advancements in AI, IoT, and edge computing will further push the boundaries, making cloud computing an indispensable tool for the future. For businesses of all sizes, embracing the cloud is no longer optional; it is essential for staying competitive in today's fast-paced digital landscape.

Trash to Treasure: Revolutionizing E-Waste Management

Somoshree Pramanik (CSE/22/027)

Electronic waste (e-waste) is growing at an alarming rate: global generation reached 50 million metric tons in 2020 and is rising by 7.5% a year. This surge poses significant environmental and health risks because e-waste contains toxic substances such as lead, mercury, and cadmium. Effective recycling and disposal strategies are crucial to mitigating these challenges.
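As a rough illustration of what a 7.5% annual growth rate implies, the standard compound-growth and doubling-time formulas give the following, assuming (purely for illustration, not as a forecast) that the rate stays constant. Here $W_0$ is the 2020 total and $r = 0.075$:

$$
W(t) = W_0\,(1 + r)^t, \qquad
T_2 = \frac{\ln 2}{\ln(1 + r)} = \frac{\ln 2}{\ln 1.075} \approx \frac{0.693}{0.0723} \approx 9.6\ \text{years}
$$

In other words, if that rate held, annual e-waste generation would double in under a decade.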

Technologies such as robotics, artificial intelligence, and biotechnology are helping to optimize recycling processes and recover valuable materials. Global initiatives, including the Basel Convention, the EU's WEEE Directive, and the UN's Sustainable Development Goals, address e-waste management. Effective e-waste management requires a multi-faceted approach. Individuals can adopt sustainable consumption practices, support recycling initiatives, and advocate for policy changes. Governments and manufacturers must implement Extended Producer Responsibility (EPR) policies, invest in recycling technologies, and promote sustainable consumption.

The benefits of proper e-waste management are numerous. Recycling conserves natural resources, reduces energy consumption, and decreases greenhouse gas emissions. Additionally, it creates jobs and stimulates economic growth. Conversely, improper disposal leads to environmental pollution, health risks, and economic losses.

E-waste recycling also presents opportunities for innovation. Closed-loop production, where materials are continuously cycled back into production, can significantly reduce waste. Biotechnology and nanotechnology can extract valuable materials from e-waste, creating new industries.

Challenges persist, however. Lack of awareness, inadequate infrastructure, and weak regulation hinder e-waste management. Informal recycling practices, particularly in developing countries, pose health risks to workers and communities.

To overcome these challenges, collaboration is essential. Governments, manufacturers, and consumers must work together to develop and implement effective e-waste management strategies. Education and awareness campaigns can encourage responsible consumption and disposal practices. The future of e-waste management looks promising, with emerging technologies and innovative approaches. Advanced recycling technologies, biodegradable materials, and product design innovations hold potential. By embracing these solutions, we can mitigate e-waste's environmental and health impacts.

In conclusion, e-waste management requires immediate attention and collective action. By adopting sustainable practices, investing in recycling technologies, and promoting responsible consumption, we can ensure a safer, more environmentally conscious future.

The Impact of Automation on Future Job Markets

Sudip Ghosh (CSE/22/058)

Rapid advances in automation technology are fundamentally reshaping industries across the globe.

As industries seek greater efficiency, lower operating and labour costs, and higher productivity, they have increasingly turned to AI-driven automation. This has had complex implications for the future job market, raising questions about job displacement, new career opportunities, and the need for reskilling.

The most immediate and visible impact of automation is job displacement. Workers whose tasks are routine or manual are at the highest risk of being replaced. For example, heavy industries such as automobile manufacturing are already extensively automated. Office tasks such as data entry and customer service are also increasingly handled by automated systems, which can complete them faster and often more accurately than human workers. Automation is affecting the medical sector as well. According to a McKinsey Global Institute report, as many as 800 million workers worldwide could be displaced by automation by 2030.

Alongside these challenges, however, automation also opens up new job opportunities, provided we keep our skills up to date. Automation is creating demand for roles such as AI engineer, data analyst, machine learning engineer, robotics engineer, research scientist, project manager, business development engineer, implementation engineer, and software engineer. Beyond these, automation has the potential to create new jobs in healthcare, agriculture, manufacturing, and transport and storage. Technicians, engineers, and developers will also be needed to build, maintain, and improve automation systems, which means workers must be reskilled and upskilled. According to a survey by ServiceNow, an American software company, automation will create 5 to 7 lakh tech jobs in India within five years.

Automation has both positive and negative effects on the future job market. Even as it displaces some workers, it creates new career opportunities for those who adapt. Ensuring that workers benefit from automation will require proactive investment in education and training. Technological progress often creates new opportunities even as it disrupts old paradigms, and the key to success lies in our ability to adapt, learn, and evolve in response to this change.

Artificial Intelligence Aided Social Media and Adults

Subhrangsu Das (CSE/23/115)

We live in an era of digitization, in which artificial intelligence enhances the efficiency and speed of almost every algorithm and work process.

This has, of course, increased the volume, speed, accuracy, and quality of work being done, but the benefits apply to everyone, including people with the wrong intentions. Social media and artificial intelligence have made it easier to deceive people who have little exposure to or knowledge of these technologies. This has led to a drastic increase in scams and to unusual psychological effects, especially among adults. Adults aged forty and above have been particularly vulnerable. Yet people who are heavily engaged with social media and have plenty of experience with it are not spared either: they suffer from different problems that affect their personal lives, including psychological disorders that differ from those of the older age group.

Social media was intended as a web-based virtual space where people could socialize without leaving home and let others know what they were doing or planning to do. It was meant to be a way of communicating without meeting in person, which was valuable because people often migrate away from their native places and cannot easily see their loved ones. This has its own repercussions. There is a real difference between conversing virtually, for example on a video call, and meeting in person and spending quality time together: online, you can barely read someone's body language to judge whether they are telling the truth. They may be lying or hiding something that we cannot detect. This can lead to confusion and chaos and can create a void of falsehood and betrayal. This is where people who exploit it to scam and deceive others enter the picture. Today, scams can be found in almost every part of a social media app.

The scams running in this era of social media are endless and constantly evolving. Every now and then a new technique is devised to exploit existing loopholes. Some are very common, could easily happen to you, and are convincing enough that falling for them is quite likely, because of how well they are carried out and how legitimate they look. One example is falsely accusing a member of your family of a crime they never committed. The scammers make it look real and even produce convincing "proof", such as a voice note of your relative crying for help, which pressures you to comply and decide in haste. Decisions made in haste are rarely sound, and the scammers then demand a hefty amount of money. Indeed, most of these scams are carried out for money.

Another well-known incident happened recently and shows how ignorance can trap anyone. The victim was not some uneducated person unfamiliar with how things work, but a highly esteemed citizen: S P Oswal, chairperson of the Vardhman Group and a Padma Bhushan awardee. Fraudsters claiming to be from the CBI told him that his Aadhaar card had been misused in a case involving fake passports and financial fraud, and placed him under a so-called "online arrest", which is neither legal nor how law enforcement works in our country. Unaware of this, Oswal complied and paid a hefty sum of 7 crore rupees to be cleared of the charges.

There is more to these scams. In a newer scam, you receive a wedding invitation in the form of an APK file, i.e. an Android application to download. The moment you install the file, the security of your device is compromised, and sensitive details such as your passwords and bank details are sent to the scammers. Their targets range from young to old and from the unaware to tech experts. No one is exempt, but older people and people with less knowledge fall for these scams more easily.

Social media has now become mostly a place to show off a lavish lifestyle, which attracts many followers but may or may not be genuine. People post a small, scripted slice of their so-called perfect lives to appear happy and successful, while their real lives may be completely different. So-called influencers may post about their perfect relationship while being accused of, or suffering from, domestic violence, or show off how rich and successful they are while the cars and houses in their posts are staged or rented.

These posts attract people who aspire to such a lifestyle and follow these influencers, and deep down they become depressed that they cannot achieve what others seem to have. This has contributed to rising depression among teenagers and to the so-called "hustle culture", in which influencers claim to work an impossible number of hours to be successful, which in turn causes fatigue and depression among their followers.

In addition, "reels", a genre of short-form videos, have done extensive damage to people's attention spans. It is often claimed that, because of such tactics by social media giants, the average person's attention span has fallen to just 8 seconds. This decline is especially harmful to students and affects the quality of their education. This shows how dangerous social media has become.

Since AI was introduced into social media, it has become even easier to carry out these scams and to fake lifestyles and results in order to gather views and followers. AI has indeed contributed to these negative aspects of society as well. We therefore need regulation at both the corporate and the personal level to safeguard ourselves from the harmful effects of AI-aided social media.

Unlocking the Power of Computer Vision

Souradeep Manna (CSE/21/101)

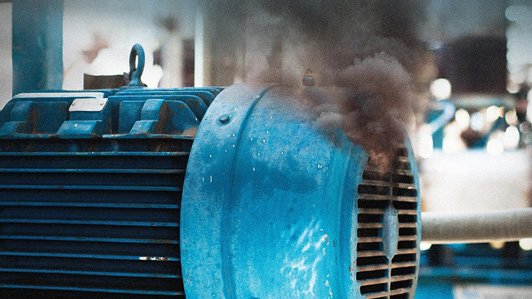

In the intricate world of modern machinery, where complex systems are essential for daily operations, the potential for malfunction is a persistent concern.

A flickering light, a mysterious beep, or an unexpected shutdown can disrupt operations, leading to downtime, costly repairs, and productivity losses. Enter the Maintenance Error Decision Aid (MEDA): a digital detective that assists in diagnosing and solving these mechanical mysteries. MEDA combines advanced diagnostic techniques, machine learning, and expert systems to help technicians identify and resolve the root causes of equipment failures swiftly and accurately. This paper explores the mechanics of MEDA, its advantages, applications, and future prospects, demonstrating how it is transforming industrial maintenance practices.

Modern industrial systems rely on complex machinery and automated processes, making maintenance and troubleshooting an essential part of their operations. While traditional maintenance strategies often involve routine checks or reactive repairs, the need for more intelligent, data-driven methods has become increasingly apparent. MEDA, or Maintenance Error Decision Aid, is an innovative diagnostic tool that acts as a "digital Sherlock Holmes" for machinery, solving the puzzles of mechanical failures by analyzing vast amounts of operational data.

By integrating machine learning, artificial intelligence (AI), and expert systems, MEDA offers an efficient, systematic approach to diagnosing faults, offering valuable decision support to technicians, and enabling proactive maintenance strategies. This paper delves into how MEDA functions, its core components, benefits, and future prospects in the field of predictive maintenance.

Just as a detective solves a case by collecting clues and analyzing them, MEDA operates as a sophisticated diagnostic system that identifies the root cause of malfunctions in industrial machinery. MEDA works by gathering data from various sources, applying deductive reasoning, and providing actionable recommendations for repairs or improvements.

2.1 Data Acquisition

The first step in MEDA’s diagnostic process involves collecting extensive data from multiple sources. This includes:

- Sensor Data: Real-time data from sensors embedded in machinery, monitoring parameters such as temperature, pressure, vibration, and speed.

- Historical Maintenance Records: Past maintenance logs that document previous issues, repairs, and part replacements.

- Operator Logs: Insights into how the machine has been operated, including any unusual occurrences or manual interventions.

- Real-time Video Feeds: If available, video footage or visual inspection data can be fed into the system to detect visible signs of damage or wear.

By analyzing this wealth of data, MEDA can identify patterns and anomalies that could signal the onset of a problem.
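The article does not say how MEDA detects anomalies, so the following is only a hypothetical sketch of one simple technique that fits the description: a rolling z-score that flags a sensor reading when it deviates sharply from the readings just before it. The function name, window size, and threshold are illustrative assumptions, not MEDA's actual method.

```python
from statistics import mean, stdev

# Hypothetical sketch: flag sensor readings that deviate sharply from a
# recent baseline using a rolling z-score. Not MEDA's documented method.


def find_anomalies(readings, window=10, threshold=3.0):
    """Return (index, value) pairs whose z-score against the preceding
    `window` readings exceeds `threshold`."""
    if window < 2:
        raise ValueError("window must contain at least two readings")
    anomalies = []
    for i in range(window, len(readings)):
        baseline = readings[i - window:i]
        mu, sigma = mean(baseline), stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: any change at all is a deviation.
            if readings[i] != mu:
                anomalies.append((i, readings[i]))
            continue
        if abs(readings[i] - mu) / sigma > threshold:
            anomalies.append((i, readings[i]))
    return anomalies


if __name__ == "__main__":
    # Illustrative vibration readings with one sudden spike.
    vibration = [1.0, 1.1, 0.9, 1.0, 1.05, 0.95, 1.0, 1.1, 0.9, 1.0, 1.02, 4.8, 1.0]
    print(find_anomalies(vibration))
```

Comparing each reading only with its recent past lets the baseline drift slowly with normal wear while still catching abrupt changes.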

2.2 Expert System Integration

Once data is collected, MEDA taps into a knowledge-based expert system that contains a vast repository of information. This includes:

- Troubleshooting Guidelines: Predefined steps for diagnosing common issues with specific equipment.

- Fault Trees: Flowcharts or decision trees that help trace the root cause of specific malfunctions.

- Expert Advice: Recommendations and best practices based on years of accumulated industry expertise.

By cross-referencing the real-time data with this expert knowledge, MEDA is able to hypothesize potential causes of the malfunction. This process is akin to a detective piecing together clues to form a coherent narrative of the failure.
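The article describes fault trees as flowcharts or decision trees that trace a malfunction to its root cause. Under that reading, a minimal sketch might walk yes/no questions about observed symptoms until it reaches a likely cause. Every symptom, cause, and recommendation below is invented for illustration and is not taken from MEDA's knowledge base.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Union

# Hypothetical decision-tree style fault tree. The symptoms and causes
# are illustrative placeholders, not real MEDA content.


@dataclass
class Question:
    symptom: str        # observation key to check
    if_yes: Node
    if_no: Node


Node = Union[Question, str]  # a leaf is a string naming the likely cause

FAULT_TREE: Node = Question(
    symptom="motor_overheating",
    if_yes=Question(
        symptom="high_vibration",
        if_yes="Worn bearing: inspect and replace bearing",
        if_no="Blocked cooling: clean fan and air vents",
    ),
    if_no=Question(
        symptom="intermittent_shutdown",
        if_yes="Loose wiring: check power connections",
        if_no="No known fault: continue monitoring",
    ),
)


def diagnose(tree: Node, observations: dict[str, bool]) -> str:
    """Walk the tree, answering each question from the observed symptoms."""
    node = tree
    while isinstance(node, Question):
        # Symptoms that were not reported are treated as absent.
        node = node.if_yes if observations.get(node.symptom, False) else node.if_no
    return node


if __name__ == "__main__":
    print(diagnose(FAULT_TREE, {"motor_overheating": True, "high_vibration": True}))
```

Keeping the tree as data rather than hard-coded `if` statements means experts could extend it without changing the diagnostic code.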

2.3 Decision Support

MEDA doesn’t stop at identifying the issue; it goes a step further by providing actionable recommendations for addressing the problem. These may include:

- Repair Procedures: Detailed instructions on how to resolve the malfunction, ensuring the correct steps are taken.

- Replacement Parts: Recommendations for specific parts that may need to be replaced, streamlining the procurement process.

- Preventive Maintenance Tasks: Suggestions for tasks that should be completed to avoid similar issues in the future, contributing to the overall longevity of the machine.

These insights empower technicians to act efficiently, reducing downtime and minimizing the need for additional troubleshooting.

The implementation of MEDA offers several significant advantages to industries that rely on complex machinery. These benefits span across efficiency, cost savings, safety, and operational continuity.

3.1 Improved Efficiency

One of the primary advantages of MEDA is the reduction in troubleshooting time. Instead of manually inspecting the equipment or running tests, MEDA automates much of the diagnostic process. By quickly analyzing vast amounts of data, it allows technicians to focus on more complex issues, enhancing overall operational efficiency.

3.2 Reduced Downtime

In industrial environments, downtime can lead to significant financial losses. MEDA helps reduce downtime by providing faster and more accurate diagnostics. With quicker identification of issues and targeted repairs, equipment is restored to optimal performance levels faster, minimizing disruptions to operations.

3.3 Enhanced Safety

MEDA plays a crucial role in improving workplace safety. By detecting issues such as faulty wiring, overheating components, or unusual vibrations before they escalate, it helps prevent accidents. Early detection of such hazards can significantly reduce the risk of workplace injuries and catastrophic equipment failures.

3.4 Optimized Maintenance

With the ability to predict potential failures, MEDA enables predictive maintenance strategies. By analyzing patterns in historical and real-time data, it can forecast when a component is likely to fail, allowing for maintenance to be scheduled before the problem occurs. This proactive approach helps extend the lifespan of machinery and reduces the need for costly emergency repairs.
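As a minimal sketch of "forecasting when a component is likely to fail", assume a wear indicator (for example, vibration amplitude) that rises as the part degrades and a known failure threshold. Fitting a straight line to recent readings by ordinary least squares and extrapolating to the threshold gives a rough time-to-failure estimate. The linear-trend model, function name, and example values are assumptions chosen for clarity; real predictive-maintenance systems typically use richer models.

```python
# Hypothetical sketch: estimate time until a rising wear indicator
# crosses a failure threshold, using a least-squares linear trend.


def time_until_threshold(times, values, threshold):
    """Return the estimated time after the last reading at which the
    fitted trend reaches `threshold`, or None if the trend is not rising."""
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need at least two matching (time, value) readings")
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    if sxx == 0:
        raise ValueError("readings must be taken at different times")
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    if slope <= 0:
        return None  # not degrading: this trend predicts no failure
    intercept = v_mean - slope * t_mean
    t_cross = (threshold - intercept) / slope
    # A crossing at or before the last reading means maintenance is due now.
    return max(0.0, t_cross - times[-1])


if __name__ == "__main__":
    hours = [0, 10, 20, 30, 40]
    vibration_mm_s = [2.0, 2.5, 3.0, 3.5, 4.0]
    print(time_until_threshold(hours, vibration_mm_s, threshold=7.0))
```

With these illustrative readings the trend rises 0.05 mm/s per hour, so the estimate is about 60 hours after the last reading, leaving time to schedule the repair.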

3.5 Cost Savings

The combined effects of improved efficiency, reduced downtime, and optimized maintenance result in significant cost savings. MEDA helps organizations avoid the high costs associated with unscheduled repairs, excessive downtime, and the need for replacement parts due to late intervention. Moreover, by improving decision-making and reducing the number of unnecessary interventions, it contributes to overall cost-effectiveness.

As the technological landscape continues to evolve, MEDA is poised to become an even more powerful tool. The integration of emerging technologies, such as AI and machine learning, will allow MEDA to continuously improve its diagnostic accuracy and predictive capabilities.

4.1 Predictive Maintenance

The future of MEDA lies in its ability to predict equipment failures before they happen. By analyzing historical data and monitoring real-time sensor data, MEDA can forecast when a machine or component is likely to fail. This predictive capability allows for timely maintenance and part replacement, further minimizing the risk of unplanned downtime.

4.2 AI Integration

Incorporating Artificial Intelligence (AI) into MEDA will enhance its diagnostic capabilities. AI algorithms can learn from past experiences, adapting to new situations and improving over time. With AI, MEDA could offer even more accurate diagnoses and tailor its recommendations to specific machines, environments, or operational conditions.

4.3 Remote Monitoring and Diagnostics

As more machines become connected through the Internet of Things (IoT), MEDA’s diagnostic capabilities will expand to include remote monitoring. This will allow technicians to diagnose and troubleshoot equipment located in remote or hard-to-reach locations, without needing to be physically present. Such advancements will be particularly valuable for industries operating in geographically dispersed areas or in hazardous environments where safety is a concern.

MEDA represents a significant leap forward in the maintenance and repair of industrial equipment. By harnessing the power of data and machine learning, it enables more efficient diagnostics, faster repairs, and predictive maintenance strategies. MEDA is not just a tool for identifying problems; it’s a strategic asset that helps organizations achieve greater reliability, lower operational costs, and enhanced safety.

With the continued advancement of AI, predictive analytics, and IoT technologies, MEDA is poised to revolutionize the way we maintain and repair our machines. As industries become more dependent on complex, interconnected systems, MEDA’s role in ensuring smooth and efficient operations will only grow in importance.

In conclusion, MEDA is a transformative technology that acts as a digital Sherlock Holmes for the industrial world. By diagnosing and resolving mechanical issues quickly and efficiently, it minimizes downtime, optimizes maintenance, and reduces costs. As AI, predictive maintenance, and remote monitoring continue to evolve, MEDA will undoubtedly become an essential tool in the maintenance arsenal of industries worldwide.

Unpacking Space Tech for Everyday Life: How Space Innovations Shape Our World

Nisha Masanta (CSE/21/T-148)

Space travel remains a distant aspiration for many; however, space technology is already playing a significant role in our everyday lives.

While the notion of space exploration evokes images of rockets launching toward far-off planets, the technologies involved often have far more practical and beneficial applications.

Space exploration is not solely about rockets and astronauts; it is also about enhancing life on Earth in ways that frequently go unnoticed. Numerous everyday items we depend on originate from technologies initially developed for space missions.

For instance, GPS, which helps us navigate our surroundings, was created using satellites designed for outer space. Memory foam mattresses, scratch-resistant glasses, and even cordless tools all originated as solutions for astronauts.

Health instruments such as MRI scanners and fitness trackers also trace their origins back to space technology, as do water filters that ensure access to clean drinking water. Moreover, renewable energy benefits from advancements made in space, with solar panels initially enhanced for spacecraft now contributing to a more sustainable lifestyle.

Satellites not only connect us to the internet but also monitor weather patterns, track disasters, and help study climate change. Space exploration may seem remote, but its advantages are tangible: each time we use these technologies, we are reminded that reaching for the stars improves life here on Earth, and the connection between space and our world becomes increasingly evident.

Space technology is not just about exploring distant galaxies; it is about improving life here on Earth. Every breakthrough in the space industry has the potential to make our world more connected, sustainable, and innovative. As we continue to push into the final frontier, the benefits of space exploration will reach ever deeper into our everyday lives, proving that the sky is no longer the limit but just the beginning.

The Intersection of Biotech and Tech

Priti Senapati (CSE/22/146)

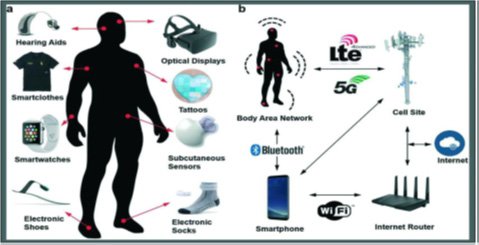

The convergence of biotechnology and technology is unlocking new frontiers in healthcare, with innovations in health monitoring, personalized medicine, and gene editing transforming the way we prevent, diagnose, and treat diseases. By leveraging advanced technologies such as artificial intelligence (AI), wearable devices, and genetic engineering tools, scientists and medical professionals are now able to develop highly personalized, precision-based treatments and interventions.

This article explores how the fusion of biotech and tech is reshaping modern healthcare, with a particular focus on innovations in health monitoring, the emergence of personalized medicine, and breakthroughs in gene editing technologies like CRISPR.

One of the primary areas of intersection is health monitoring, where wearable devices, such as smartwatches and biosensors, have become increasingly advanced. These devices now use biotechnology for continuous, real-time tracking of vital health metrics like heart rate, glucose levels, and blood oxygen, combined with AI for predictive analytics. These technologies provide individuals with personalized health data and enable early detection of potential health issues.

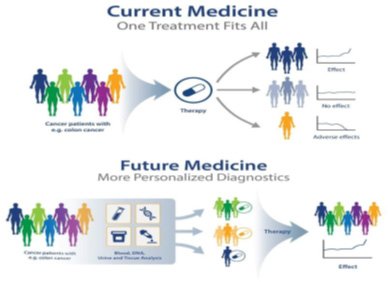

Personalized medicine is another significant impact of the biotech-tech convergence. By leveraging genomic data, doctors can develop custom treatment plans tailored to an individual’s genetic profile. AI and machine learning algorithms analyze vast amounts of genetic information to identify disease markers and predict how patients will respond to specific therapies. This approach is particularly impactful in oncology, where treatments are becoming more targeted based on a patient’s unique tumor biology.

Gene editing, particularly with tools like CRISPR (Clustered Regularly Interspaced Short Palindromic Repeats), represents one of the most revolutionary areas where biotech and tech intersect. The ability to make precise changes to DNA holds the potential to cure genetic diseases, improve resistance to infections, and even enhance certain physical traits.
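The "precise" part of CRISPR editing comes from how the Cas9 enzyme finds its target. The widely used SpCas9 cuts next to a roughly 20-nucleotide protospacer matched by the guide RNA, which must be immediately followed by an "NGG" PAM (any base, then two guanines). The simplified sketch below lists candidate target sites in a DNA string; it scans only the forward strand and ignores off-target scoring, both deliberate simplifications, and the function name is hypothetical.

```python
import re

# Simplified sketch of locating SpCas9 target sites: a 20-nt protospacer
# immediately followed by an NGG PAM. Forward strand only; no off-target
# or efficiency scoring.


def find_cas9_targets(dna: str, guide_len: int = 20) -> list[tuple[int, str, str]]:
    """Return (start, protospacer, PAM) for every forward-strand NGG site
    that has a full-length protospacer before it."""
    dna = dna.upper()
    targets = []
    # The lookahead makes overlapping PAM sites (e.g. in "GGG") all match.
    for match in re.finditer(r"(?=([ACGT]GG))", dna):
        pam_start = match.start()
        start = pam_start - guide_len
        if start >= 0:
            targets.append((start, dna[start:pam_start], match.group(1)))
    return targets


if __name__ == "__main__":
    for site in find_cas9_targets("ATGCTAGCTAGGCTAACGTTAGCATCGATCGTGGAT"):
        print(site)
```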

Biotechnology’s advances in genomics and molecular biology, combined with AI’s ability to process complex data sets, allow for faster identification of potential drug candidates and the design of more effective treatments. These technologies are reducing the time and cost associated with drug development, leading to quicker breakthroughs in therapies for diseases such as cancer, Alzheimer’s, and rare genetic disorders.

The intersection of biotech and tech also brings ethical considerations and regulatory challenges. It raises concerns about data privacy, especially for genomic information, as well as the ethical implications of gene editing, particularly germline editing and "designer babies". Regulatory bodies face the challenge of keeping up with rapid technological advancements to ensure these innovations are deployed safely and equitably.

In conclusion, the convergence of biotech and tech is ushering in a new era of healthcare innovation, with transformative advancements in health monitoring, personalized medicine, gene editing, and drug development. Wearable health devices and AI-powered monitoring systems are enabling more personalized and proactive care, while genomics and AI are making personalized treatments a reality for patients across the globe. At the same time, CRISPR and other gene-editing technologies hold immense promise for curing genetic diseases and improving human health on a molecular level.

However, these advances also raise important ethical, regulatory, and societal questions. As these technologies continue to evolve, it will be crucial to balance innovation with responsibility, ensuring that they are used safely and equitably. Ultimately, the fusion of biotech and tech is set to revolutionize the future of medicine, creating a more precise, individualized, and efficient healthcare system that has the potential to improve lives worldwide.

The Rise of 5G

Deepanjan Das (CSE/21/044)

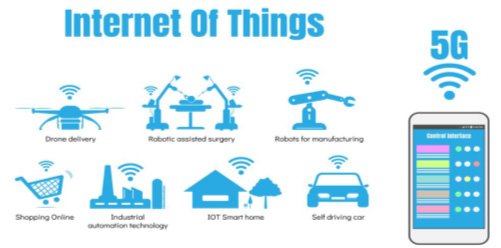

The advent of 5G technology marks a pivotal moment in the evolution of wireless communication, promising faster speeds, lower latency, and the ability to support a vast number of connected devices. This next-generation network is expected to drive significant advancements across multiple industries, including the Internet of Things (IoT), smart cities, and telecommunications. With its ability to handle high bandwidth and low-latency communication, 5G is poised to enable a new wave of innovation, transforming how we live, work, and interact with technology.

5G will play a central role in the growth of IoT by providing the infrastructure needed to connect billions of devices seamlessly. Its ultra-low latency and high bandwidth will allow IoT devices, from smart home products to industrial sensors, to communicate in real time, driving automation, efficiency, and better decision-making across industries such as manufacturing, healthcare, and logistics.

5G is expected to be a critical enabler for smart cities, offering the high-speed connectivity needed for technologies such as autonomous vehicles, intelligent traffic systems, smart grids, and public safety networks. It will help improve urban infrastructure management, reduce congestion, and optimize resource use, making cities more efficient, sustainable, and livable.

As telecom providers roll out 5G networks, the telecommunications industry is undergoing a transformation. Telecom companies are investing heavily in 5G infrastructure to meet the demand for faster speeds and more reliable services. They also face challenges such as securing spectrum allocation, upgrading existing infrastructure, and navigating regulatory hurdles.

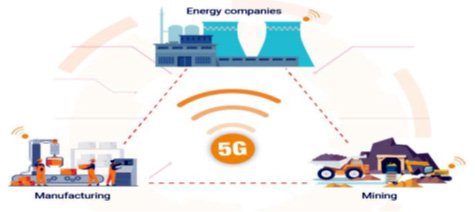

The article explores how 5G will accelerate digital transformation in various industries. In sectors like manufacturing, healthcare, and logistics, 5G will enable real-time data exchange, predictive maintenance, remote healthcare services, and smart supply chain management, leading to improved productivity and efficiency.

The widespread adoption of 5G presents several challenges, including the high costs of infrastructure deployment, security concerns, and regulatory issues. The article discusses the complexities telecom providers face in upgrading infrastructure, securing enough spectrum, and ensuring the smooth rollout of 5G services worldwide.

Countries such as the U.S., China, and South Korea are leading a global race to deploy 5G. Competition between telecom giants is likely to drive innovation and shape the future of the global telecommunications market. 5G also brings economic opportunities, particularly through new business models and services.

With the increase in connected devices and data traffic, 5G raises concerns about data privacy and cybersecurity. Massive data flows create new risks, and stronger security protocols are needed to protect personal and sensitive information in an increasingly connected world.

In the long term, 5G is expected to be a key enabler for next-generation technologies such as augmented reality (AR), virtual reality (VR), and artificial intelligence (AI). Combined with the high speed and low latency of 5G, these technologies will open up new possibilities in entertainment, education, and gaming.

The rise of 5G is set to reshape the telecommunications landscape and transform industries by enabling faster, more efficient communication and the seamless connection of billions of devices. Its impact on IoT and smart cities will drive innovation, create new business opportunities, and improve the quality of life in urban environments. However, the successful deployment of 5G requires overcoming significant challenges, including infrastructure investment, spectrum allocation, data security, and regulatory issues. As 5G continues to roll out worldwide, it will pave the way for new technological advancements and business models that will shape the future of connectivity. The full potential of 5G can only be realized with careful planning, collaboration, and attention to ethical and security considerations, ensuring that its benefits are widely distributed and responsibly managed.

Edge Computing: A Paradigm Shift in Computing

Arghya Das (CSE/22/040)

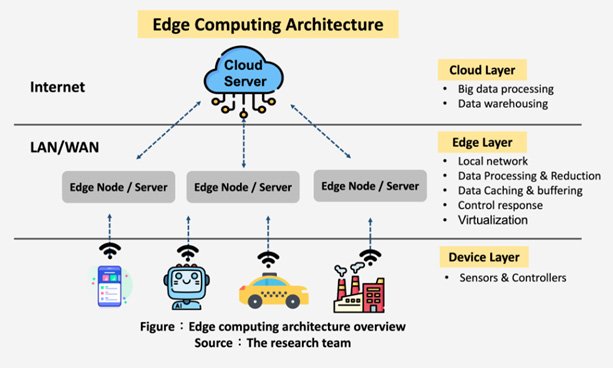

Edge computing brings computation and data storage closer to the source, reducing latency, improving response times, and enhancing privacy. This report explores the core concepts, benefits, challenges, and applications of edge computing, including IoT, autonomous vehicles, and industrial automation.

The advent of the Internet of Things (IoT) has ushered in an era of unprecedented data generation. As billions of devices connect to the internet, the volume and velocity of data are soaring. Traditional cloud computing, while powerful, often struggles to handle the latency and bandwidth demands of real-time applications. To address these challenges, edge computing has emerged as a transformative technology, bringing computation and data storage closer to the source of data generation.

Edge computing is a distributed computing paradigm that pushes computation and data storage closer to the source of data generation. By processing data at the edge of the network, it reduces latency, improves response times, and enhances privacy and security.
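A rough propagation-delay estimate shows why distance matters. The calculation assumes that signals in optical fiber travel at about two-thirds of the speed of light in a vacuum (roughly 2×10^5 km/s). It uses two illustrative distances: 1,000 km to a cloud data center and 10 km to an edge server. Routing, queueing, and processing delays are ignored, and in real networks these add to the figures below.

```latex
% Assumed: v is about 2e5 km/s in fiber; the distances d are illustrative, not measured.
\begin{aligned}
t_{\text{RTT}} &= \frac{2d}{v}, \qquad v \approx 2\times10^{5}\ \text{km/s} \\
d = 1000\ \text{km}:\quad t_{\text{RTT}} &= \frac{2\times1000}{2\times10^{5}}\ \text{s} = 10^{-2}\ \text{s} = 10\ \text{ms} \\
d = 10\ \text{km}:\quad t_{\text{RTT}} &= \frac{2\times10}{2\times10^{5}}\ \text{s} = 10^{-4}\ \text{s} = 0.1\ \text{ms}
\end{aligned}
```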

Key Benefits:

- Reduced Latency: By processing data locally, edge computing avoids sending every request over long distances to centralized data centers. This can significantly reduce latency, enabling real-time applications and decision-making.

- Improved Response Times: Faster processing and reduced latency lead to improved response times, critical for applications like autonomous vehicles, industrial automation, and augmented reality.

- Enhanced Privacy and Security: Processing sensitive data at the edge can reduce the risk of data breaches and unauthorized access while data is in transit. By limiting the amount of data transmitted to the cloud, organizations can enhance data privacy.

- Reduced Bandwidth Usage: Edge computing can significantly reduce the amount of data transmitted to the cloud, optimizing network bandwidth and reducing costs.

- Increased Reliability: By distributing computing resources across multiple edge devices, edge computing can improve system reliability and fault tolerance.

Key Components:

- Edge Devices: These devices, such as sensors, IoT devices, and mobile phones, generate and collect data.

- Edge Servers: These servers process and store data locally, reducing the load on the cloud.

- Cloud Infrastructure: The cloud provides centralized management, storage, and analytics capabilities.
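A minimal Python sketch can illustrate the three tiers above together with the bandwidth saving listed earlier. It assumes a hypothetical temperature sensor (the edge device) that reports once per second. An edge server collapses each 10-minute window into one summary, and the cloud receives only that summary plus any out-of-range readings. The names `summarise_window` and `WindowSummary`, and the 60 °C alert threshold, are illustrative assumptions and not part of any real edge platform.

```python
import json
from dataclasses import asdict, dataclass
from statistics import mean


@dataclass
class WindowSummary:
    """What the (hypothetical) edge server forwards to the cloud instead of every raw reading."""
    sensor_id: str
    count: int
    minimum: float
    maximum: float
    average: float
    alerts: list  # individual readings above the alert threshold, kept verbatim


def summarise_window(sensor_id: str, readings: list, alert_threshold: float) -> WindowSummary:
    """Edge-server step: collapse one time window of raw device readings into a summary."""
    if not readings:
        raise ValueError("window must contain at least one reading")
    alerts = [r for r in readings if r > alert_threshold]
    return WindowSummary(
        sensor_id=sensor_id,
        count=len(readings),
        minimum=min(readings),
        maximum=max(readings),
        average=round(mean(readings), 2),
        alerts=alerts,
    )


def json_size(payload) -> int:
    """Approximate bytes on the wire if the payload is sent to the cloud as UTF-8 JSON."""
    return len(json.dumps(payload).encode("utf-8"))


if __name__ == "__main__":
    # Edge-device step: an assumed sensor reporting once per second for 10 minutes.
    raw = [21.0 + (i % 10) * 0.1 for i in range(600)]
    raw[300] = 85.0  # one abnormal spike that should still reach the cloud

    summary = summarise_window("sensor-42", raw, alert_threshold=60.0)
    print(summary.count, summary.alerts)  # → 600 [85.0]
    print("raw bytes:", json_size(raw), "summary bytes:", json_size(asdict(summary)))
```

Sending one summary instead of 600 raw readings is the bandwidth reduction in miniature. The trade-off is that the cloud no longer sees every individual value, which is why anomalies are forwarded separately.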

Applications:

- Internet of Things (IoT): Edge computing enables real-time analysis of IoT data, optimizing device performance and enabling predictive maintenance.

- Autonomous Vehicles: By processing sensor data at the edge, autonomous vehicles can make rapid decisions, improving safety and efficiency.

- Industrial Automation: Edge computing empowers industries to optimize production processes, reduce downtime, and improve quality control.

- Healthcare: Edge computing can enable real-time patient monitoring, remote diagnosis, and telemedicine, improving healthcare delivery.

- Virtual and Augmented Reality (VR/AR): By reducing latency and processing data locally, edge computing enhances the user experience in VR and AR applications.
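A quick calculation shows why rapid decisions matter for autonomous vehicles: the car keeps moving while it waits for a response. The figures below assume a speed of 100 km/h and two illustrative round-trip delays, 100 ms for a distant server and 10 ms for a nearby edge node. Both delays are assumptions, not measurements of any real system.

```latex
% Assumed: speed 100 km/h; delays of 100 ms and 10 ms are illustrative only.
\begin{aligned}
v &= 100\ \text{km/h} = \frac{100\,000\ \text{m}}{3600\ \text{s}} \approx 27.8\ \text{m/s} \\
s &= v\,t \\
t = 100\ \text{ms}:\quad s &\approx 27.8 \times 0.1 = 2.78\ \text{m} \\
t = 10\ \text{ms}:\quad s &\approx 27.8 \times 0.01 = 0.278\ \text{m}
\end{aligned}
```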

Challenges:

- Security and Privacy: Protecting sensitive data at the edge requires robust security measures.

- Power and Energy Constraints: Edge devices often have limited power and energy resources.

- Management and Orchestration: Managing and orchestrating a distributed network of edge devices can be complex.

- Network Latency and Bandwidth Limitations: Ensuring reliable and low-latency connectivity between edge devices and the cloud is crucial.

Edge computing is a powerful technology that has the potential to revolutionize the way we process and analyze data. By bringing computation and data storage closer to the source, edge computing can unlock new opportunities and address the challenges of the data-driven era.


The Internet of Things: Connecting the World Around Us

Mohammad (CSE/22/020)

The Internet of Things (IoT) is changing the way we interact with the world, making everyday objects smarter and more connected. By linking devices to the internet, IoT allows them to communicate with each other, making our lives more convenient and efficient. From smart homes to industrial machines, IoT is impacting nearly every aspect of modern life.

At its core, IoT is about adding sensors and internet connectivity to everyday objects so they can share data. This includes everything from smart fridges and lightbulbs to machines in factories and cars. These devices can make decisions and respond to information automatically, which means they don’t always need human input to work effectively.

A great example of IoT in action is the smart home. Devices like smart speakers, thermostats, and lighting systems can be controlled through voice commands or apps on your phone. Not only do these gadgets make life easier, but they also help save energy by adjusting to your needs throughout the day.
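The kind of automatic decision a smart thermostat makes can be sketched as a simple rule. The Python below is a minimal, hypothetical example. The `Thermostat` class, the 0.5 °C band, and the day/night targets are assumptions, not any product's actual logic. The thermostat switches heating on or off around a target temperature and uses a small hysteresis band so the heater does not toggle with every tiny fluctuation. It also lowers the target at night to save energy.

```python
from dataclasses import dataclass


@dataclass
class Thermostat:
    """Hypothetical smart thermostat: heats toward a target using a hysteresis band."""
    target_c: float
    band_c: float = 0.5  # assumed tolerance either side of the target, avoids rapid on/off switching
    heating: bool = False

    def update(self, measured_c: float) -> bool:
        """Decide whether the heater should run, given the latest sensor reading."""
        if measured_c < self.target_c - self.band_c:
            self.heating = True
        elif measured_c > self.target_c + self.band_c:
            self.heating = False
        # Inside the band: keep the previous decision.
        return self.heating

    def set_schedule_target(self, hour: int, day_c: float = 21.0, night_c: float = 17.0) -> None:
        """Lower the target overnight (assumed schedule: 07:00 to 22:59 counts as day)."""
        self.target_c = day_c if 7 <= hour < 23 else night_c


if __name__ == "__main__":
    t = Thermostat(target_c=21.0)
    print([t.update(x) for x in [19.0, 20.8, 21.6, 21.2, 20.4]])  # → [True, True, False, False, True]
    t.set_schedule_target(hour=2)
    print(t.target_c)  # → 17.0
```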

IoT is also having a big impact in fields like healthcare. Wearable devices such as fitness trackers and smartwatches can monitor heart rate and activity levels, and even alert doctors or family members if something seems wrong. In farming, IoT tools can track soil quality or crop health, helping farmers grow food more efficiently and sustainably.

Even industries like manufacturing and transportation are getting smarter thanks to IoT. Machines in factories can monitor their own performance, predicting when they need maintenance before they break down. In cities, IoT is being used to manage traffic, improve public services, and make infrastructure more efficient.
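A very simple version of this self-monitoring can be sketched in Python. Real predictive-maintenance systems typically use trained models over many signals. This hypothetical `VibrationMonitor` instead uses a plain rule: it flags a machine when the rolling average of its last few vibration readings exceeds a limit. The window size and the 4.0 mm/s limit are illustrative assumptions, not values taken from any standard or datasheet.

```python
from collections import deque
from statistics import mean


class VibrationMonitor:
    """Hypothetical machine monitor: flags maintenance when rolling-average vibration drifts too high."""

    def __init__(self, window: int, limit_mm_s: float):
        self.readings = deque(maxlen=window)  # keeps only the most recent `window` readings
        self.limit_mm_s = limit_mm_s  # assumed alarm level, for illustration only

    def add(self, vibration_mm_s: float) -> bool:
        """Record a reading; return True once a full window averages above the limit."""
        self.readings.append(vibration_mm_s)
        full = len(self.readings) == self.readings.maxlen
        return full and mean(self.readings) > self.limit_mm_s


if __name__ == "__main__":
    monitor = VibrationMonitor(window=3, limit_mm_s=4.0)
    # Made-up readings from a machine whose vibration slowly increases as a part wears.
    readings = [2.0, 2.1, 2.0, 3.5, 4.8, 5.6, 6.1]
    print([monitor.add(r) for r in readings])  # → [False, False, False, False, False, True, True]
```

Averaging over a window rather than reacting to single readings keeps one noisy spike from triggering an unnecessary maintenance call.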

While IoT is already making our world smarter, it’s only going to keep growing. As more devices become connected, we’ll see even more ways that IoT can improve daily life, reduce waste, and make the world a little more efficient. The future of IoT is bright, and it’s exciting to think about all the new possibilities it could bring.

The Rise of Voice Technology: Changing How We Interact with Machines

Partha Sarathi Manna (CSE/22/004)

Voice technology has quickly become a cornerstone of modern convenience, transforming how we interact with devices and services. From asking Alexa to play your favorite playlist to dictating messages on your smartphone, voice-powered systems have integrated seamlessly into our daily routines. As these systems continue to evolve, they are not just making technology more intuitive but also more inclusive and accessible.

Voice recognition technology has undergone remarkable advancements. What began as limited systems with restricted vocabulary has transformed into sophisticated tools powered by artificial intelligence (AI) and natural language processing (NLP). Today, voice assistants like Siri, Google Assistant, and Amazon Alexa are capable of understanding complex queries, providing real-time information, and even learning from user behavior to offer personalized experiences.

Voice technology is revolutionizing many aspects of life. Voice commands enable users to control lighting, thermostats, and home security systems, offering a new level of convenience and energy efficiency. Assistants can manage calendars, set reminders, and even draft emails, helping users save time and stay organized. In healthcare, voice-controlled devices allow hands-free operation and can monitor health metrics such as sleep patterns and heart rate. In education, students benefit from personalized learning tools that respond to their queries instantly, enhancing the learning experience.

Perhaps one of the most significant contributions of voice technology is in promoting inclusivity. For individuals with visual or mobility impairments, voice interfaces provide an essential tool for accessing technology independently. Similarly, real-time language translation through voice tools helps bridge communication gaps, fostering understanding across different cultures.

Despite its many benefits, voice technology faces hurdles. Concerns about privacy and data security persist, because voice assistants record and store voice interactions, which may be used to improve performance. Additionally, achieving consistent accuracy across diverse accents and languages remains a technical challenge.
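Speech-recognition accuracy is commonly measured with word error rate (WER). WER is the number of word substitutions, deletions, and insertions needed to turn the system's transcript into a correct reference transcript, divided by the number of words in the reference. Comparing WER across groups of speakers is one way to quantify the accent gap described above. The Python below is a minimal sketch using a standard word-level edit distance. The example sentences are hypothetical. Real evaluations usually normalize punctuation and number formats first, whereas this sketch only lowercases and splits on whitespace.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / words in the reference,
    computed as a word-level Levenshtein (edit) distance."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # dist[i][j] = edits needed to turn the first i reference words into the first j hypothesis words
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (or match when cost is 0)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


if __name__ == "__main__":
    ref = "turn on the kitchen lights"
    print(word_error_rate(ref, "turn on the chicken lights"))  # → 0.2 (one substitution)
    print(word_error_rate(ref, "turn the kitchen light"))      # → 0.4 (one deletion, one substitution)
```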

Looking ahead, the future of voice technology is promising. As AI algorithms improve and systems become more refined, we can expect even greater integration of voice interfaces in industries such as healthcare, education, and entertainment. With continued innovation, voice technology will not only make interactions with machines more natural but also empower individuals and enhance global connectivity.

Voice technology is more than a convenience—it represents a fundamental shift in how we communicate with the digital world. By addressing its challenges and exploring its potential, we can unlock a future where technology truly speaks our language.

Augmented Reality: Blending the Virtual and Real Worlds

Usha Rani Bera (CSE/22/099)

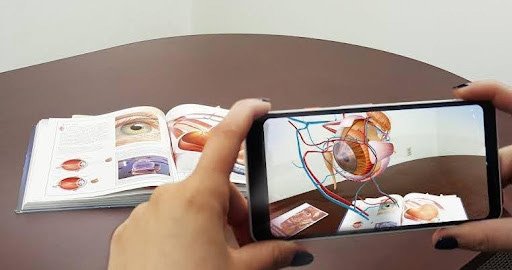

Augmented Reality (AR) is transforming the way we perceive and interact with the world around us. Unlike Virtual Reality (VR), which immerses users in entirely digital environments, AR overlays virtual elements onto the real world, creating a seamless blend of physical and digital experiences. This technology, once confined to science fiction, is now being applied in various industries, redefining the boundaries of innovation and practicality.
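Overlaying a virtual object starts with working out where a 3D point should appear on the 2D screen. A common textbook model for this is the pinhole camera: a point at (X, Y, Z) in camera coordinates lands at pixel u = f_x·X/Z + c_x, v = f_y·Y/Z + c_y. The Python below is a minimal sketch of that projection only. The camera parameters are assumed values for a hypothetical 1280×720 camera, and the sketch assumes the point is already expressed in camera coordinates. A real AR system must also track the device's position and orientation, and correct for lens distortion, to get there.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class CameraIntrinsics:
    """Pinhole camera parameters, in pixels (assumed values in the example below)."""
    fx: float  # focal length along x
    fy: float  # focal length along y
    cx: float  # principal point x (roughly the image center)
    cy: float  # principal point y


def project_point(point_cam: Tuple[float, float, float],
                  k: CameraIntrinsics) -> Optional[Tuple[float, float]]:
    """Project a 3D point in camera coordinates (meters; X right, Y down, Z forward)
    to pixel coordinates. Returns None if the point is not in front of the camera."""
    x, y, z = point_cam
    if z <= 0:
        return None  # cannot be drawn: the point is behind or level with the lens
    u = k.fx * x / z + k.cx
    v = k.fy * y / z + k.cy
    return (u, v)


if __name__ == "__main__":
    # Hypothetical 1280x720 camera; these intrinsics are illustrative, not calibrated.
    cam = CameraIntrinsics(fx=1000.0, fy=1000.0, cx=640.0, cy=360.0)
    # A virtual label anchored 0.5 m right of, 0.2 m above, and 2 m in front of the camera.
    print(project_point((0.5, -0.2, 2.0), cam))  # → (890.0, 260.0)
```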

AR's rise can be attributed to advancements in smartphones, smart glasses, and machine learning. Popular applications like Pokémon GO introduced millions to the potential of AR, showcasing its ability to create engaging and interactive experiences. Today, AR extends far beyond gaming, finding utility in fields such as education, healthcare, retail, and manufacturing.

In education, AR transforms traditional learning methods by making lessons more interactive and immersive. Imagine history students exploring ancient ruins through AR or medical students visualizing human anatomy in 3D. By bringing concepts to life, AR enhances understanding and engagement. Similarly, in healthcare, AR is proving to be a game-changer. Surgeons are beginning to use AR to visualize complex procedures, overlaying critical data directly onto the patient in real time, which can increase precision and safety.

The retail sector is also embracing AR to revolutionize the shopping experience. Virtual fitting rooms allow customers to try on clothes without stepping into a store, while furniture brands like IKEA offer AR apps that let users visualize how products will look in their homes. This not only improves convenience but also reduces the uncertainty of online shopping.

In manufacturing and construction, AR aids workers by overlaying blueprints, instructions, or safety guidelines onto machinery or building sites. This streamlines processes, reduces errors, and enhances productivity. AR's ability to provide real-time information directly within a worker’s field of view is driving a new era of efficiency and innovation.

However, the widespread adoption of AR is not without challenges. High development costs, limited hardware options, and concerns about privacy and data security are significant hurdles. Moreover, creating seamless and intuitive AR experiences requires robust infrastructure and continuous advancements in software and hardware integration.

Despite these challenges, AR’s future is incredibly promising. With the advent of 5G networks and advancements in wearable devices, AR experiences are set to become more accessible and immersive. Industries like entertainment and tourism are already exploring AR to create unique, personalized experiences, further broadening its appeal.