Issue 2: Spring 2024

| Sl No. | Article | Author |
|---|---|---|
| 1 | Unlocking the Power of Computer Vision | Arghya Das (CSE/22/040) |
| 2 | Web3 And All The Hype Behind It | Mukutmani Das (CSE/20/114) |
| 3 | The Human Touch: Why AI Can't Replace Us in the Workspace | Rahul Kishore Paul (CSE/20/097) |
| 4 | Augmented Reality: The Past The Present and The Future | Aparannha Roy (CSE/20/048) |
| 5 | Artificial Intelligence: a boon? a bane? or a hoax? | Subhrangsu Das (CSE/23/115) |
| 6 | What’s Real About Virtual Reality? | Susmita Adhikary (CSE/23/172) |
| 7 | History of Cryptocurrency | Poulami Manna (CSE/23/030) |
| 8 | Trending Era of IoT | Somoshree Pramanik (CSE/22/027) |
| 9 | Feynman Technique: Revolutionizing Learning in the Tech World | Soubhik Banerjee (CSE/21/111) |
| 10 | Unlocking the Power of Natural Language Processing | Sudip Ghosh (CSE/21/112) |

Unlocking the Power of Computer Vision

Arghya Das (CSE/22/040)

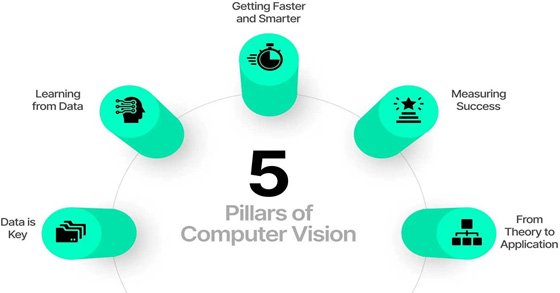

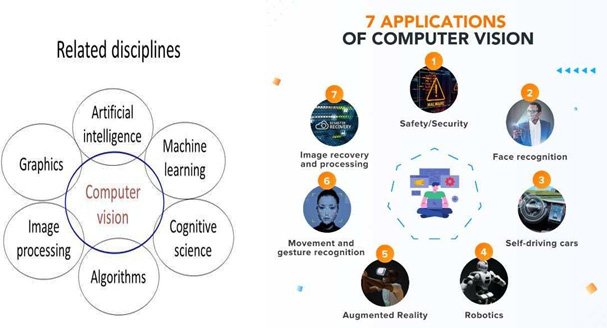

This article delves into the interdisciplinary nature of computer vision, from image and video analysis to applications in autonomous vehicles, healthcare, and augmented reality. Witness how computer vision, integrated with machine learning, reshapes automation and paves the way for a future defined by enhanced visual perception and decision-making.

Computer vision in computer science engineering involves creating algorithms for machines to interpret and analyze visual data. It encompasses image processing, pattern recognition, and machine learning, enabling computers to understand and make decisions based on visual information.

Applied in fields like image analysis, object recognition, and autonomous vehicles, computer vision enhances automation and enables machines to perceive and respond to their surroundings.

Computer vision leverages a combination of image processing, pattern recognition, and machine learning to enable computers to make sense of the visual world. It is the bridge between the digital and physical realms, allowing machines to replicate and enhance the human ability to perceive and interpret visual data.

Some of the very well known algorithms used in computer vision are:

- Convolutional Neural Networks (CNN):

CNN has become a cornerstone in computer vision, particularly for tasks like image recognition and classification.

- Support Vector Machines (SVM):

SVM is a robust algorithm used for image classification and segmentation. It works by finding the optimal hyperplane that best separates different classes in a high-dimensional space.

- Haar Cascade Classifiers:

Haar cascade classifiers are utilized for object detection in images and video streams.
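To make the idea of a separating hyperplane concrete, here is a minimal sketch of a linear SVM in plain Python. It approximates the maximum-margin solution with stochastic subgradient descent on the hinge loss; the toy 2D points, the learning rate and the regularization strength are all illustrative assumptions, and a real vision pipeline would use image features and a library implementation instead.

```python
# Minimal linear SVM sketch: subgradient descent on the regularized hinge loss.
# Data and hyperparameters are illustrative assumptions, not from the article.

def train_linear_svm(points, labels, lr=0.1, reg=0.01, epochs=200):
    """Return weights w and bias b of the hyperplane w.x + b = 0."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in zip(points, labels):
            margin = y * (w[0] * x1 + w[1] * x2 + b)
            # Regularization step: shrinking w favours a wider margin.
            w[0] -= lr * reg * w[0]
            w[1] -= lr * reg * w[1]
            # Hinge-loss step: only points inside the margin push the hyperplane.
            if margin < 1:
                w[0] += lr * y * x1
                w[1] += lr * y * x2
                b += lr * y
    return w, b


def predict(w, b, point):
    """Classify a point by the side of the hyperplane it falls on."""
    score = w[0] * point[0] + w[1] * point[1] + b
    return 1 if score >= 0 else -1


# Two linearly separable classes, labelled +1 and -1.
points = [(2, 2), (3, 3), (2, 3), (-2, -2), (-3, -1), (-1, -3)]
labels = [1, 1, 1, -1, -1, -1]

w, b = train_linear_svm(points, labels)
predictions = [predict(w, b, p) for p in points]
print(predictions)
```

Only the points that fall inside the margin trigger an update, which is why the final hyperplane is determined by the examples closest to the class boundary.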

To understand Computer Vision, it's essential to grasp several key concepts and areas of knowledge, such as:

- Image Basics: [Pixels, Resolution, etc.]

- Image Processing: [Filters and Transformations, etc.]

- Mathematics: [Linear Algebra, Calculus, etc.]

- Programming Skills

- Machine Learning: [Supervised and Unsupervised Learning, Training and Testing Data, etc.]

- Neural Networks and Deep Learning: [Basic Neural Network Architecture, CNN, etc.]

- Feature Extraction: [Edges, Corners, Descriptors, etc.]

- Object Recognition: [Object Detection, Localization, Classification, etc.]

- 3D Computer Vision: [Stereopsis, Depth Perception, etc.]
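Several of these concepts (pixels, filters, edges) meet in a single small operation: sliding a 3x3 kernel over an image. The sketch below applies the horizontal Sobel kernel to a tiny grayscale image stored as a list of lists. The image values are made up for illustration, and the function computes a cross-correlation over interior pixels only, which is a simplifying assumption.

```python
# Horizontal Sobel kernel: responds to left-to-right changes in brightness.
SOBEL_X = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]


def filter3x3(image, kernel):
    """Slide a 3x3 kernel over the interior pixels of a grayscale image."""
    height, width = len(image), len(image[0])
    out = [[0] * (width - 2) for _ in range(height - 2)]
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            acc = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    acc += kernel[dr + 1][dc + 1] * image[r + dr][c + dc]
            out[r - 1][c - 1] = acc
    return out


# Toy 4x6 image: dark on the left, brighter on the right (a vertical edge).
image = [
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
    [0, 0, 0, 10, 10, 10],
]

response = filter3x3(image, SOBEL_X)
print(response)  # [[0, 40, 40, 0], [0, 40, 40, 0]]
```

The response is zero in the flat regions and large where the brightness changes, which is exactly what an edge feature is.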

1. Image and Video Analysis:

Computer vision finds extensive use in image and video analysis. From facial recognition in security systems to content moderation on social media platforms, the technology excels in extracting meaningful information from visual content.

2. Object Recognition and Tracking:

Industries such as manufacturing and logistics benefit from computer vision's prowess in object recognition and tracking. Automated warehouses utilize this technology to streamline inventory management and optimize logistics operations.

3. Autonomous Vehicles:

The advent of autonomous vehicles relies heavily on computer vision. Cars equipped with vision systems can interpret the surrounding environment, identify obstacles, and make real-time decisions, enhancing road safety and revolutionizing transportation.

4. Medical Imaging:

In healthcare, computer vision plays a pivotal role in medical imaging. From diagnosing diseases through radiology to assisting in surgical procedures, it contributes to more accurate and efficient healthcare outcomes.

5. Augmented and Virtual Reality:

Computer vision is a key enabler of augmented and virtual reality experiences. It allows devices to understand and interact with the user's environment, creating immersive and responsive virtual worlds.

Computer vision's integration with machine learning algorithms enables automation in various tasks. From industrial quality control to agricultural monitoring, machines equipped with vision systems can analyze data, make decisions, and execute tasks with precision.

As computer vision continues to advance, researchers are exploring novel applications and addressing challenges such as robustness in diverse environments, ethical considerations, and privacy concerns. The integration of computer vision with other emerging technologies, like edge computing and 5G, promises to unlock even greater possibilities.

In the realm of computer science, the rise of computer vision signifies a paradigm shift in how machines perceive and interact with the world. Its applications are broad and diverse, impacting industries ranging from healthcare to automotive. As researchers and engineers continue to push the boundaries of this technology, the future holds exciting possibilities for the integration of computer vision into our daily lives, shaping the way we work, communicate, and experience the world around us.

- Szeliski, R. (2010). "Computer Vision: Algorithms and Applications." Springer.

- Architecting Computer Vision Applications: From Concept to Deployment ( https://markovate.com/blog/computer-vision-applications/ )

- Machine Learning in Computer Vision ( https://blog.hireterra.com/machine-learning-in-computer-vision-484cbd84cabf )

- Russakovsky, O., Deng, J., Su, H., Krause, J., Satheesh, S., Ma, S., ... & Fei-Fei, L. (2015). "ImageNet Large Scale Visual Recognition Challenge." International Journal of Computer Vision, 115(3), 211-252.

- Litjens, G., Kooi, T., Bejnordi, B. E., Setio, A. A. A., Ciompi, F., Ghafoorian, M., ... & Sánchez, C. I. (2017). "A survey on deep learning in medical image analysis." Medical image analysis, 42, 60-88.

Web3 And All The Hype Behind It

Mukutmani Das (CSE/20/114)

Web3 is being touted as the future of the internet. The vision for this new, blockchain-based web includes cryptocurrencies, NFTs, DAOs, decentralized finance, and more. It offers a read/write/own version of the web, in which users have a financial stake in and more control over the web communities they belong to.

Do you remember the first time you heard about Bitcoin? Maybe it was a faint buzz about a new technology that would change everything. Bitcoin now seems to be everywhere. Amidst all the demands on our attention, many of us didn’t notice cryptocurrencies slowly seeping into the mainstream. Crypto, however, is just the tip of the spear. The underlying technology, blockchain, is what’s called a “distributed ledger”: a database hosted by a network of computers instead of a single server, which offers users an immutable and transparent way to store information. Blockchain is now being deployed to new ends: for instance, to create “digital deed” ownership records of unique digital objects, known as non-fungible tokens (NFTs).

Put very simply, Web3 is an extension of cryptocurrency, using blockchain in new ways to new ends. A blockchain can store the number of tokens in a wallet, the terms of a self-executing contract, or the code for a decentralized app (dApp). Not all blockchains work the same way, but in general, coins are used as incentives for miners to process transactions. On “proof of work” chains like Bitcoin, solving the complex math problems necessary to process transactions is energy-intensive by design. On “proof of stake” chains, which are newer but increasingly common, processing transactions simply requires that the verifiers with a stake in the chain agree that a transaction is legit, a process that’s significantly more efficient. In both cases, transaction data is public, though users’ wallets are identified only by a cryptographically generated address. Blockchains are “write only” (more precisely, append-only), which means you can add data to them but can’t delete it.
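The "add but never delete" property comes from each block storing the hash of the block before it. The following is a minimal single-machine sketch of that idea using Python's standard `hashlib` and `json` modules. The block fields and helper names (`block_hash`, `append_block`, `is_valid`) are hypothetical, and a real blockchain adds networking, consensus and digital signatures on top.

```python
import hashlib
import json

GENESIS_PREV = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, data, prev_hash):
    """Hash the block contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Append a new block that points at the current tip of the chain."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Recompute every hash and check that each block links to its predecessor."""
    expected_prev = GENESIS_PREV
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        expected_prev = block["hash"]
    return True


chain = []
append_block(chain, "alice pays bob 5")
append_block(chain, "bob pays carol 2")
append_block(chain, "carol pays dave 1")
valid_before = is_valid(chain)

# Rewriting history changes a block's contents but not its stored hash,
# so the tampering is detected.
chain[1]["data"] = "bob pays carol 200"
valid_after = is_valid(chain)
print(valid_before, valid_after)
```

Because every hash depends on the one before it, changing an old entry would require recomputing every later block, which is what makes the ledger effectively immutable once many parties hold copies.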

Web3 and cryptocurrencies run on what are called “permissionless” blockchains, which have no centralized control and don’t require users to trust — or even know anything about — other users to do business with them.

The term 'Web 3.0' was coined in 2014 by Ethereum co-founder Gavin Wood. Gavin put into words a solution for a problem that many early crypto adopters felt: the Web required too much trust. That is, most of the Web that people know and use today relies on trusting a handful of private companies to act in the public's best interests.

Although it's challenging to provide a rigid definition of what Web3 is, a few core principles guide its creation.

- Web3 is decentralized: instead of large swathes of the internet controlled and owned by centralized entities, ownership gets distributed amongst its builders and users.

- Web3 is permissionless: everyone has equal access to participate in Web3, and no one gets excluded.

- Web3 has native payments: it uses cryptocurrency for spending and sending money online instead of relying on the outdated infrastructure of banks and payment processors.

- Web3 is trustless: it operates using incentives and economic mechanisms instead of relying on trusted third-parties.

Ownership - Web3 gives you ownership of your digital assets in an unprecedented way. For example, say you're playing a web2 game. If you purchase an in-game item, it is tied directly to your account. If the game creators delete your account, you will lose these items. Or, if you stop playing the game, you lose the value you invested into your in-game items.

Censorship Resistance - The power dynamic between platforms and content creators is massively imbalanced.

Decentralized autonomous organizations (DAOs) - As well as owning your data in Web3, you can own the platform as a collective, using tokens that act like shares in a company. DAOs let you coordinate decentralized ownership of a platform and make decisions about its future.
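As a rough illustration of tokens acting like shares, the sketch below tallies a proposal where each member's vote is weighted by their token balance. The balances, the quorum rule and the function names are assumptions made for the example; real DAOs encode their own voting rules in smart contracts.

```python
def tally(balances, votes):
    """Sum token weight behind each choice; non-holders carry zero weight."""
    totals = {}
    for member, choice in votes.items():
        weight = balances.get(member, 0)
        totals[choice] = totals.get(choice, 0) + weight
    return totals


def proposal_passes(balances, votes, quorum=0.5):
    """Pass if enough tokens voted (assumed quorum) and 'yes' outweighs 'no'."""
    totals = tally(balances, votes)
    supply = sum(balances.values())
    cast = sum(totals.values())
    return cast >= quorum * supply and totals.get("yes", 0) > totals.get("no", 0)


# Hypothetical token holdings.
balances = {"alice": 50, "bob": 30, "carol": 20}

tied = proposal_passes(balances, {"alice": "yes", "bob": "no", "carol": "no"})
passed = proposal_passes(balances, {"alice": "yes", "bob": "no", "carol": "yes"})
print(tied, passed)
```

In the first vote the two sides hold 50 tokens each, so the proposal fails; in the second, 70 tokens back it and it passes.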

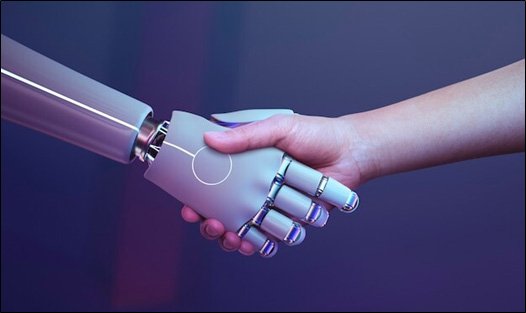

The Human Touch: Why AI Can't Replace Us in the Workspace

Rahul Kishore Paul (CSE/20/097)

The rise of artificial intelligence (AI) has raised a burning question in the minds of all professionals: “Will robots take our jobs?” While the capabilities of AI are undeniable, it may be a little premature to paint a dystopian picture of human obsolescence. In reality, the future of work is not about replacing human talent, but about complementing and enhancing our unique strengths.

Let's be honest, AI excels at tasks that make us yawn. From crunching numbers to churning out repetitive reports, AI can do it faster and more accurately than any human. Imagine a world where data analysis is instantaneous, freeing up accountants and analysts for higher-order problem-solving. AI-powered assembly lines can churn out products with laser-like precision, minimizing errors and boosting productivity.

But what about the tasks that require a spark of ingenuity, a dash of empathy, or a firm handshake? This is where humans reign supreme. AI can't replace the human ability to think outside the box, to generate fresh ideas, and to adapt to unforeseen circumstances. In a world increasingly driven by innovation, these are the skills that will keep us ahead of the curve.

Furthermore, the human touch remains irreplaceable in building relationships and navigating the complexities of social interaction. AI might be able to mimic human conversation, but it lacks the emotional intelligence and cultural nuances that make genuine connections. From client negotiations to team building, these are areas where humans will continue to hold the upper hand.

The future of work is not about humans vs. machines; it's about humans with machines. AI is a powerful tool that can be harnessed to augment our capabilities, not replace them. Imagine doctors using AI to diagnose diseases with greater accuracy, while still providing patients with the human care and compassion they need. Picture architects using AI to generate design options, but ultimately relying on their own creative vision to bring those designs to life.

This collaborative approach will unlock a new era of productivity and innovation. AI can handle the mundane, freeing us to focus on the meaningful. It can provide us with insights and data we never knew existed, empowering us to make better decisions. Ultimately, the future belongs to those who can leverage the power of AI while staying true to their uniquely human strengths.

So, can AI replace humans in the workspace? Not entirely. While AI will undoubtedly transform the nature of work, it will never replicate the full spectrum of human capabilities. The key lies in embracing AI as a partner, not a competitor. By harnessing its power while staying true to our own strengths, we can create a future of work that is not only efficient, but also fulfilling, creative, and profoundly human.

Augmented Reality: The Past The Present and The Future

Aparannha Roy (CSE/20/048)

Augmented reality has come a long way from science-fiction concept to a science-based reality. Until recently the costs of augmented reality were so substantial that designers could only dream of working on design projects that involved it. Today things have changed, and augmented reality is even available on the mobile handset. That means design for augmented reality is now an option for all shapes and sizes of UX designers.

Augmented reality is a view of the real, physical world in which elements are enhanced by computer-generated input. These inputs may range from sound to video, to graphics, to GPS overlays and more. The first conception of augmented reality occurred in a novel by L. Frank Baum written in 1901, in which a set of electronic glasses mapped data onto people; it was called a “character marker”. Today, augmented reality is a real thing and not a science-fiction concept.

The seeds of augmented reality (AR) were sown surprisingly early, back at the start of the 1900s!

- In 1901, in his novel "The Master Key", L. Frank Baum (author of "The Wonderful Wizard of Oz") describes spectacles that project information onto people’s faces, hinting at the concept of AR.

- In 1957, Morton Heilig invents the Sensorama, a machine that simulates multi-sensory experiences, laying the groundwork for immersive technologies like AR. Of course, it wasn’t computer controlled, but it was the first example of an attempt at adding additional data to an experience.

- In 1968, Ivan Sutherland, often called the “father of computer graphics,” develops the “Sword of Damocles,” a clunky head-mounted display that overlays computer graphics onto the real world, marking the birth of AR technology.

- In 1974, AR takes flight as Myron Krueger creates Videoplace, a room-sized AR environment where users interact with virtual objects.

- In 1990, the term “augmented reality” is finally coined by Boeing researcher Tom Caudell.

- In 1992, Louis Rosenberg develops “Virtual Fixtures,” one of the first practical AR systems used for training aircraft mechanics.

- From 2000, AR starts to reach the public, appearing in entertainment and gaming with games like AR Pong and EyeToy. In the same year, Bruce Thomas develops an outdoor mobile AR game called ARQuake.

- In 2009, ARToolKit (a design tool) is made available in Adobe Flash.

- In 2013, Google announces its open beta of Google Glass (a project with mixed success).

- In 2015, Microsoft announces augmented reality support and its augmented reality headset, HoloLens.

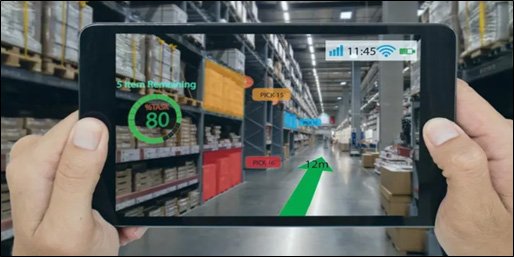

Augmented Reality (AR) has moved far beyond the realm of science fiction and is rapidly transforming our world. Today, AR isn’t just about Pokemon Go or funky Snapchat filters. It’s infiltrating various industries and enriching our daily lives in exciting ways. Let’s delve into the current state of play in AR:

Gone are the days of clunky head-mounted displays. Smartphones, with their powerful processors, cameras and sensors, have become the primary gateway to AR experiences. Platforms like Apple’s ARKit and Google’s ARCore empower developers to create compelling AR apps readily accessible to millions.

AR is still making waves in the gaming world, with titles like Pokemon Go and The Witcher: Monster Slayer pushing the boundaries of location-based gaming. Imagine exploring your neighborhood and encountering fantastical creatures or landmarks from your favorite games!

AR is revolutionizing education by bringing abstract concepts to life. Students can dissect virtual frogs, explore historical sites in 3D, or even practice surgery on holographic patients.

Imagine trying on clothes virtually before stepping into a store or visualizing how furniture would look in your living room before buying it. AR is transforming the retail industry, offering enhanced product visualization and personalized shopping experiences.

AR is streamlining complex tasks in manufacturing and maintenance. Technicians can overlay instructions onto machinery, troubleshoot issues remotely, and even receive real-time guidance from experts miles away.

AR is assisting surgeons in visualizing organs during surgery, helping patients understand medical procedures, and even offering rehabilitation therapy through interactive games.

Augmented reality (AR) is rapidly evolving, blending the physical and digital worlds in exciting ways. But like any emerging technology, it faces challenges that must be overcome to unlock its full potential. Let’s dive into the hurdles and the bright future of AR:

- Hardware Limitations:

Current AR devices, primarily smartphones, struggle with battery life, processing power, and field of view. Imagine a bulky phone headset limiting your movement or running out of juice mid-exploration.

- Software and Content Creation:

Developing high-quality, engaging AR experiences requires specialized skills and tools. Creating realistic virtual objects that seamlessly interact with the real world is no easy feat.

- User Experience:

AR interfaces need to be intuitive and natural, minimizing friction and cognitive load. Clunky controls or confusing menus can quickly break the immersion.

- Privacy and Security Concerns:

AR raises concerns about data collection and potential misuse of personal information. Ensuring user privacy and responsible data handling is crucial.

- Ethical Considerations:

The blurring lines between reality and virtuality raise ethical questions about addiction, manipulation, and social impact. Responsible development and use of AR are paramount.

Jessica Lowry, a UX designer writing for The Next Web, says that AR is the future of design, and we tend to agree. Mobile phones are already such an integral part of our lives that they might as well be extensions of our bodies. As technology becomes further integrated into our lives without being intrusive, it is a certainty that augmented reality will provide opportunities to enhance user experiences beyond measure.

This will almost certainly bring major advances in the much-hyped but still little-seen Internet of Things. UX designers in the AR field will need to seriously consider how traditional experiences can be improved through AR. Just making your cooker capable of using computer enhancements is not enough; it needs to deliver healthier eating or better-cooked food for users to care.

The future will belong to AR when it improves task efficiency or the quality of the output of an experience for the user. This is the key challenge of the 21st century UX profession.

AR, or augmented reality, has gone from pipe dream to reality in just over a century. There are many AR applications in use or under development today; however, the concept will only take off universally when UX designers think about how they can integrate AR with daily life to improve productivity, efficiency or quality of experiences. There is unlimited potential for AR. The big question is: how will it be unlocked?

- Did L. Frank Baum Predict Augmented Reality or Warn Us About Its Power? ( https://www.inverse.com/article/18146-l-frank-baum-the-master-key-augmented-reality-futurism )

- Eye Am a Camera: Surveillance and Sousveillance in the Glassage (https://techland.time.com/2012/11/02/eye-am-a-camera-surveillance-and-sousveillance-in-the-glassage/ )

- Augmented reality is the future of design (https://thenextweb.com/news/augmented-reality-is-the-future-of-design )

Artificial Intelligence: a boon? a bane? or a hoax?

Subhrangsu Das (CSE/23/115)

In the current era of advanced computing, Artificial Intelligence (AI) is a hot topic and has dominated the headlines since ChatGPT was released to the public. Naturally, emotions and opinions about it are mixed, with plenty of accusations and endorsements attached to its name. In this article I’d like to present my opinions, backed by logic and facts that make them hard to ignore. As the saying goes, “Beauty lies in the eyes of the beholder”, so I’d like to examine both the positive and the negative sides of AI, and also a middle ground between them, making it easier for both parties to understand each other.

Let’s start with the positives. Since its recent popularization, AI has been solving difficult problems and offering new approaches to problem-solving time and again. We can’t deny that this usefulness serves a huge range of people across many fields, such as students, freelancers and researchers. The term Artificial Intelligence is not new, though: it refers to any machine that can replicate human understanding in even the slightest manner, which dates it back much further than you might expect. Even software as simple as image recognition is an AI program, as it replicates the human sense of sight. A speech recognition program that transcribes, translates and searches from just a voice sample is an excellent example of AI that the masses have been using for a long time. Keeping these in mind, there’s no doubt that AI has been making our lives easier in many ways. Current research is pushing AI towards replicating human intelligence more completely, giving us the answers we would otherwise seek from professionals at an individual and personal level; in other words, replacing professionals of limited knowledge with machines that do the job better and have seemingly endless storage from which to learn new things. This leads to cost cutting at different levels, making an institution more profitable. And for anyone who has started a business or an organisation on a small scale, AI is an excellent way to carry out small miscellaneous tasks that aren’t significant enough to justify spending money or hiring extra workers. So it’s clear that AI has a deep positive impact on people and works as an assistant in the everyday jobs of every group of people.

Now, moving on to the darker side of things, we need to address the main reason people fear, or even hate, AI today: it can replace workers en masse, saving cost, time and resources, which leads to more unemployment among the youth. A survey of 750 business leaders, conducted by an organization named “ResumeBuilder” and published in November 2023, reveals that 37 percent of them believe AI will be replacing jobs in 2024 due to its increased efficiency and cost effectiveness, while employees themselves admit that at least 29 percent of their tasks can be handled completely by AI alone, and 40 percent of employers are ready to replace their employees.

So this is what the news headlines are about, and they often exaggerate these figures to create a sense of fear, while there’s more to it than just numbers. The same research by “ResumeBuilder” also states that 96 percent of employers look for workers with an AI upper hand, i.e. the ability to combine AI with their own skills to become more self-sufficient and error-free, rather than relying completely on AI automation to do their job. Elon Musk, the CEO of SpaceX and Tesla, owner of Twitter (now X) and a co-founder of OpenAI, the company which developed ChatGPT, himself said in an interview that AI presently cannot make you lose your job, but a person using AI to hone and sharpen their skills definitely can, clearly implying that AI is currently just a tool, though of course a very powerful one. Even if we set aside one person’s words and opinion and look at the stats and the tests, they drive us to the same conclusion.

In its attempt to become more human-like, Artificial Intelligence even falls under some of our proverbs, such as “You are what you eat”. Let me explain how I see it. AI is made to give a precise output from just a minimal prompt by feeding the system heaps of data. We’re talking about enormous volumes of data: photos, videos, research papers, texts, scanned manuscripts, existing articles and more, and even live human interaction to understand what a user expects. So we can safely say that it gives us answers from what it has been fed. That’s why, although neutrality is said to be one of its key features, it can actually be manipulated easily by feeding it wrong or biased data, which destroys that neutrality.

Basically, it can be deduced that AI cannot be trusted to take decisions on any matter: it lacks a human conscience, which is what makes us superior, and it cannot single-handedly do a job that is crucial to day-to-day life. This is all that we can conclude from the current situation, controversies and opinions, and it is very much dependent on time and what may happen in the future. These conclusions may change according to how we respond to the situation.

What’s Real About Virtual Reality?

Susmita Adhikary (CSE/23/172)

As usual with infant technologies, realizing the early dreams for virtual reality (VR) and harnessing it to real work has taken longer than the initial wild hype predicted. Now, finally, it's happening.

In his great invited lecture in 1965, "The Ultimate Display," Ivan Sutherland laid out a vision (see the sidebar), which I paraphrase:

Don't think of that thing as a screen, think of it as a window, a window through which one looks into a virtual world. The challenge to computer graphics is to make that virtual world look real, sound real, move and respond to interaction in real time, and even feel real.

This research program has driven the field ever since.

What is VR? For better or worse, the label virtual reality stuck to this particular branch of computer graphics. I define a virtual reality experience as any in which the user is effectively immersed in a responsive virtual world. This implies user dynamic control of viewpoint.

VR almost worked in 1994. In 1994, I surveyed the field of VR in a lecture that asked, "Is There Any Real Virtue in Virtual Reality?" My assessment then was that VR almost worked: our discipline stood on Mount Pisgah looking into the Promised Land, but we were not yet there. There were lots of demos and pilot systems, but except for vehicle simulators and entertainment applications, VR was not yet in production use doing real work.

This personal assessment of the state of the VR art concludes that whereas VR almost worked in 1994, it now really works. Real users routinely use production applications.

Net assessment: VR now barely works. This year I was invited to do an up-to-date assessment of VR, with funding to visit major centers in North America and Europe. Every one of the component technologies has made big strides. Moreover, I found that there now exist some VR applications routinely operated for the results they produce. As best I can determine, there were more than 10 and fewer than 100 such installations as of March 1999; this count again excludes vehicle simulators and entertainment applications. I think our technology has crossed over the pass: VR that used to almost work now barely works. VR is now really real.

Why the exclusions? In the technology comparison between 1994 and 1999, I exclude vehicle simulators and entertainment VR applications for different reasons.

Vehicle simulators were developed much earlier and independently of the VR vision. Although they today provide the best VR experiences available, that excellence did not arise from the development of VR technologies nor does it represent the state of VR in general, because of specialized properties of the application. Entertainment I exclude for two other reasons. First, in entertainment the VR experience itself is the result sought rather than the insight or fruit resulting from the experience. Second, because entertainment exploits Coleridge's "willing suspension of disbelief," the fidelity demands are much lower than in other VR applications.

Four technologies are crucial for VR.

- The visual (and aural and haptic) displays that immerse the user in the virtual world and that block out contradictory sensory impressions from the real world;

- The graphics rendering system that generates, at 20 to 30 frames per second, the ever-changing images;

- The tracking system that continually reports the position and orientation of the user's head and limbs; and

- The database construction and maintenance system for building and maintaining detailed and realistic models of the virtual world.
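The 20 to 30 frames per second figure translates directly into a time budget for each frame. The short sketch below works out that budget and checks whether a set of per-frame stage costs fits inside it; the stage timings are purely hypothetical numbers chosen for illustration.

```python
def frame_budget_ms(fps):
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def fits_budget(stage_costs_ms, fps):
    """True if the summed per-frame stage costs fit within one frame period."""
    return sum(stage_costs_ms.values()) <= frame_budget_ms(fps)


# Hypothetical per-frame costs for a VR pipeline (not measured values).
stages = {"tracking": 5.0, "rendering": 25.0, "display": 8.0}

print(frame_budget_ms(20))      # 50.0 ms per frame
print(fits_budget(stages, 20))  # 38 ms of work fits in 50 ms
print(fits_budget(stages, 30))  # 38 ms does not fit in about 33.3 ms
```

Raising the frame rate from 20 to 30 frames per second cuts the budget from 50 ms to about 33 ms, so tracking, rendering and display together must get noticeably faster.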

History of Cryptocurrency

Poulami Manna (CSE/23/030)

Towards the end of the Second World War, the international monetary system underwent a significant transformation. The Bretton Woods system was established in 1944, creating the International Monetary Fund (IMF) and the International Bank for Reconstruction and Development (World Bank). In 1971 the system effectively collapsed when the US government suspended the convertibility of dollars into gold for other central banks. From then on, governments and banks held greater power over money.

In 1983, American cryptographer David Chaum conceived of a type of cryptographic electronic money called ecash. Later, in 1995, he implemented it through Digicash, an early form of cryptographic electronic payments.

Then came the Global Financial Crisis (GFC) of 2008, the most severe worldwide economic crisis since the Great Depression. A continuous buildup of toxic assets in the form of subprime mortgages purchased by Lehman Brothers ultimately led to the firm's bankruptcy in September 2008. Soon after, in January 2009, “Bitcoin”, the first truly decentralized digital currency, was first mined by its pseudonymous developer Satoshi Nakamoto. A cryptocurrency is a digital currency designed to work as a medium of exchange through a computer network that is not reliant on any central authority, such as a government or bank, to uphold or maintain it. Bitcoin was first released as open-source software in 2009. As of June 2023, there were more than 25,000 other cryptocurrencies in the marketplace, of which more than 40 had a market capitalization exceeding $1 billion.

Within a proof-of-work system such as Bitcoin, the safety, integrity and balance of ledgers is maintained by a community of mutually distrustful parties referred to as miners. Miners use their computers to help validate and timestamp transactions, adding them to the ledger in accordance with a particular timestamping scheme.
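The "work" that miners do can be sketched as a search for a number (a nonce) that makes a block's hash start with a required number of zeros. The example below is a simplified illustration using a single SHA-256 over a text string with a fixed, made-up timestamp. Bitcoin itself hashes a binary block header twice and compares against a numeric target, so the block format, `mine` and `verify` here are assumptions for the sketch.

```python
import hashlib


def digest_for(block_data, nonce):
    """Hash the block contents together with a candidate nonce."""
    return hashlib.sha256(f"{block_data}|{nonce}".encode("utf-8")).hexdigest()


def mine(block_data, difficulty):
    """Try nonces until the hash starts with `difficulty` zero hex digits."""
    prefix = "0" * difficulty
    nonce = 0
    while True:
        digest = digest_for(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
        nonce += 1


def verify(block_data, nonce, digest, difficulty):
    """Checking a claimed solution takes one hash, unlike finding it."""
    return digest == digest_for(block_data, nonce) and digest.startswith("0" * difficulty)


# Hypothetical block: a timestamp followed by one transaction.
block = "2009-01-03T18:15:05Z;alice->bob:5"
difficulty = 3  # small on purpose so the search finishes quickly

nonce, digest = mine(block, difficulty)
print(nonce, digest)
print(verify(block, nonce, digest, difficulty))
```

Finding the nonce takes thousands of attempts on average even at this low difficulty, while verifying it takes a single hash. That asymmetry is what lets mutually distrustful parties check each other's work cheaply.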

Some people hold cryptocurrency as an investment, while very few use it as an alternative currency. It is poorly suited to everyday payments because of slow transaction confirmation (about 10 minutes), high volatility and the potential for large losses. At the same time, it can be useful for foreign transfers, where a bank transfer takes 1-2 days and carries substantial fees.

In 2020 PayPal opened crypto services to millions of eligible users in the US. JP Morgan has opened accounts for crypto exchanges such as Coinbase and Gemini Trust. In April 2018, the RBI barred the crypto industry from the banking system following fraud cases such as Amit Bhardwaj's 'Gain Bitcoin' scam, and as a result some Indian crypto exchanges shut down. On 4 March 2020, the Supreme Court of India lifted the ban, noting that under Article 19(1)(g) of the Indian Constitution every citizen has the fundamental right to carry on any trade or business, and making clear that there is no legal prohibition on cryptocurrency trading and investment. Overall, cryptocurrencies can play an important role in the future of finance.

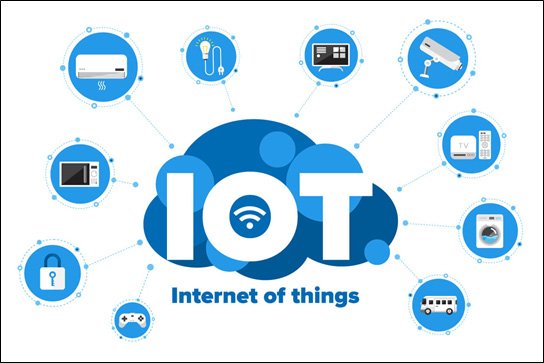

Trending Era of IoT

Somoshree Pramanik (CSE/22/027)

Technology plays a major role in our lives. It has not only changed our lifestyle but also made it more comfortable. The Internet of Things (IoT) is one such technology. It simply means a network of devices that can send data and information to, and receive it from, other devices. The things in the IoT should be well equipped with sensors, software and machine learning techniques.

The architecture of these devices combines several technologies. They have sensors embedded in them so that they can sense what is happening in their environment. A strong internet connection to other devices makes sending and receiving data convenient. Machine learning techniques and advanced analytics help the devices collect as much data as possible in very little time. Integrating AI assistants such as Alexa or Siri makes them smoother to use.

Digitalization is spreading across the world, so we have to keep pace with it. A network of smart devices is therefore essential for good interaction between devices and human beings, making their work easy and comfortable. These smart devices are useful not only for household work but also in commercial sectors. In manufacturing, machines are connected with each other, which helps to boost production and improve the customer's experience. The technology has perhaps its greatest impact in healthcare centers, where it can monitor patients' activities daily.

In healthcare, such devices can alert doctors so that a patient is diagnosed as early as possible. GPS, similarly, has made it far less difficult to pinpoint an exact location.

Smart appliances in the home enable us to carry out tasks with great ease. In agriculture, where the growth and yield of different crops and the parameters of the soil are tracked for further analysis, this kind of advanced technology is a blessing. However, as more information is shared, the risk of important data being hacked grows too. Many institutions and enterprises also run several different businesses in which many things are connected through IoT.

For them, collecting information on such a vast scale can be quite difficult. Many devices are not compatible with other devices. In some cases, network issues are a further disadvantage. Cost is also a central problem, as many cannot afford the technology. The Internet of Things has good potential, but a few challenges need to be addressed to make it a successful technology in the near future.

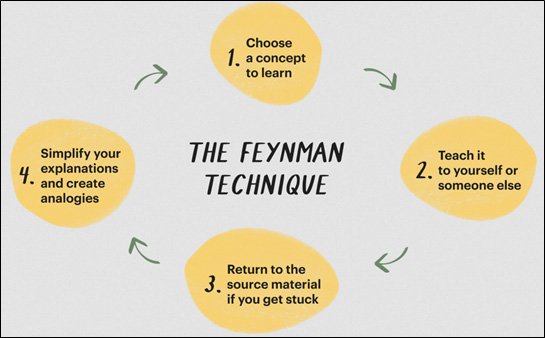

Feynman Technique: Revolutionizing Learning in the Tech World

Soubhik Banerjee (CSE/21/111)

Do you face challenges in understanding things? Then this article is for you.

In the landscape of technological education, where complex concepts and rapid advancements are the norm, the Feynman Technique emerges as a beacon of clarity and simplicity. Named after the renowned physicist Richard Feynman, known for his extraordinary teaching skills, this method is not just a study technique but a revolutionary approach to learning.

At its core, the Feynman Technique is based on the idea that understanding is the foundation of knowledge. It's not about rote memorization or cramming information; it's about truly grasping the subject matter. This technique involves four key steps: choosing a concept, teaching it to someone else, identifying gaps in understanding, and reviewing and simplifying.

The first step is to select a concept or topic, ideally something complex, and study it thoroughly. The second step, which is the crux of the method, is to explain it in simple terms, as if teaching it to someone who has no prior knowledge of the subject. This is where the magic happens. In trying to simplify the concept, one must distill it to its purest form, shedding any jargon or unnecessary complexity.

The third step is to identify areas where your understanding is lacking. This is crucial in the tech world where concepts can be intricate and multifaceted. By pinpointing these gaps, you're able to focus your learning where it's most needed.

Finally, the last step is to review and simplify further. This iterative process not only deepens understanding but also aids in retention. It’s a method that promotes active learning, as opposed to passive reading or listening, making it especially effective in the fast-paced, ever-evolving field of technology.

In the context of a tech college, where students often grapple with abstract and challenging material, the Feynman Technique can be a game-changer. It fosters a deeper comprehension, encourages independent thinking, and ultimately, empowers students to become better learners.

The beauty of the Feynman Technique lies in its simplicity and versatility. It can be applied to virtually any field, but it's particularly beneficial in technology education, where clarity and understanding are paramount. By embracing this method, students and educators alike can transform the way they approach learning, making it a more effective, enjoyable, and rewarding experience.

Unlocking the Power of Natural Language Processing

Sudip Ghosh (CSE/21/112)

In the dynamic world of technological advancements, Natural Language Processing (NLP) emerges as a revolutionary force, seamlessly bridging the divide between human linguistic expression and computerized comprehension. As a specialized domain within artificial intelligence, NLP equips machines with the unprecedented capability to understand, interpret, and respond to human language, surpassing the confines of mere syntax analysis.

At the heart of NLP lies its commitment to endow machines with an understanding of the subtleties of natural language. This endeavor enables a more human-centric interaction, as machines learn to navigate the complexities of language, encompassing semantics, sentiment, and contextual meaning. The applications of NLP are diverse and expansive, evident in the widespread use of virtual assistants, chatbots, and language translation services.

A key feature of NLP is its proficiency in processing unstructured data, such as text and speech. This capacity translates into a powerful tool for extracting meaningful insights. Sentiment analysis, for example, allows companies to tap into public opinion regarding their products or services, thereby informing strategic decisions. Moreover, machine translation, driven by NLP algorithms, paves the way for global connectivity by overcoming linguistic barriers.
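To make sentiment analysis concrete, here is a deliberately simple sketch in Python. It counts words from two small hand-written lists, which is an assumption made purely for illustration. The word lists and the function names `sentiment_score` and `sentiment_label` are hypothetical, and production systems typically rely on trained models instead of fixed word lists.

```python
import re

# Tiny hand-written lexicons, assumed for this example only.
POSITIVE = {"good", "great", "love", "excellent", "fast"}
NEGATIVE = {"bad", "poor", "hate", "slow", "broken"}


def sentiment_score(text: str) -> int:
    """Return (number of positive words) minus (number of negative words)."""
    words = re.findall(r"[a-z']+", text.lower())
    positives = sum(word in POSITIVE for word in words)
    negatives = sum(word in NEGATIVE for word in words)
    return positives - negatives


def sentiment_label(text: str) -> str:
    """Map the score to a coarse label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    reviews = [
        "I love this phone, the camera is great",
        "Battery life is poor and the screen is broken",
        "It arrived on Tuesday",
    ]
    for review in reviews:
        print(sentiment_label(review), "<-", review)
```

A word-counting approach like this misses negation ("not good") and sarcasm, which is part of why modern NLP leans on models that take context into account.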

The rapid evolution of NLP can be attributed to significant breakthroughs in deep learning and neural networks. These advanced technologies empower machines not only to comprehend language but also to learn and evolve from linguistic interactions, thereby continually enhancing their performance.

In the context of today's digital revolution, NLP stands out as a critical instrument. It enriches user experiences, streamlines task automation, and nurtures a more organic and intuitive dialogue between humans and machines. The ongoing refinement and integration of NLP into various platforms emphasize its vital role, heralding a future where fluid communication between humans and technology is not just a possibility but a commonplace reality.
